Bud Ecosystem has unveiled Bud Runtime, a Generative AI serving and inference optimization software stack designed to deliver high-performance results across diverse hardware and operating systems. With its flexible deployment capabilities, Bud Runtime enables businesses to deploy production-ready Generative AI solutions on CPUs, HPUs, NPUs, and GPUs.

As the demand for generative AI grows, businesses are encountering several obstacles when trying to adopt GenAI-based solutions. These include the high cost of GPUs, long wait times for acquiring hardware, and a lack of in-house technical expertise. Additionally, matching the performance of cloud-based services with on-premises solutions remains challenging. Hardware dependencies also limit experimentation and innovation, making it harder for businesses to explore and implement new GenAI applications effectively.

Bud Runtime addresses these challenges by offering a solution that significantly improves inference performance while reducing costs. The platform promises up to 130% better inference performance in the cloud and up to 12 times better performance on client devices. It also helps businesses save up to 55% on the total cost of ownership of their GenAI solutions.

“Bud Runtime is a game-changer for businesses looking to harness the power of generative AI,” said Jithin V.G, CEO of Bud Ecosystem Inc. “By providing an environment-agnostic platform that seamlessly integrates with diverse infrastructures—whether cloud, edge, or on-premises—Bud Runtime democratizes AI and empowers organizations to innovate without breaking the bank.”

Enterprise-Ready Features for Faster GenAI Adoption

Bud Runtime is engineered to accelerate the deployment of generative AI applications across a wide range of environments. It offers support for on-premises, cloud, and edge deployments, ensuring scalability and flexibility. Built-in features such as LLM Guardrails, model monitoring, and advanced observability help organizations maintain control over their GenAI applications while adhering to guidelines set by the White House and the EU AI framework. Additionally, Bud Runtime supports active prompt analysis and prompt optimization, further enhancing the overall efficiency and effectiveness of GenAI solutions.

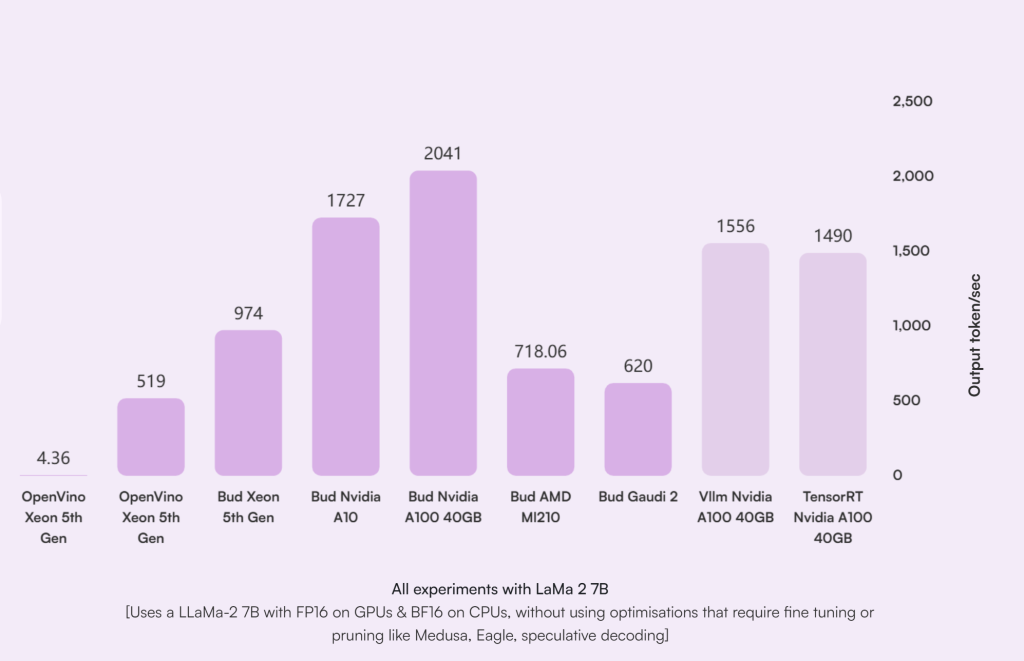

The solution is designed to help organizations move forward with their AI initiatives, regardless of their in-house expertise. By providing a unified set of APIs, Bud Runtime ensures portability across various hardware architectures, platforms, clouds, and edge environments. This consistency makes it easier for businesses to build and scale GenAI applications without compromising performance. The chart below illustrates the performance of the LLaMA model on different hardware, comparing results with and without Bud Runtime.

Bud Runtime offers significant performance gains, delivering a 60-200% increase in throughput on CPUs with accelerators. This optimization enables businesses to enhance their AI applications’ efficiency and responsiveness. In addition to improving performance, Bud Runtime helps organizations reduce costs, with potential savings of up to 55% on the total cost of ownership of their GenAI solutions.
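To make these percentages concrete, the short Python sketch below converts a percentage increase into a multiplier over a baseline and applies a percentage saving to a cost. The baseline throughput and cost figures are hypothetical placeholders chosen for illustration, not numbers published by Bud Ecosystem, and the sketch assumes "X% increase" means the baseline plus X% of the baseline.

```python
def throughput_multiplier(percent_increase: float) -> float:
    """Convert a percentage increase into a multiplier over the baseline."""
    return 1.0 + percent_increase / 100.0


def cost_after_savings(baseline_cost: float, percent_saved: float) -> float:
    """Return the cost that remains after a percentage saving is applied."""
    return baseline_cost * (1.0 - percent_saved / 100.0)


# Hypothetical baseline values, assumed for illustration only.
baseline_tokens_per_sec = 100.0
baseline_tco = 100_000.0

# A 60-200% throughput increase corresponds to roughly 1.6x-3.0x the baseline.
low = baseline_tokens_per_sec * throughput_multiplier(60)
high = baseline_tokens_per_sec * throughput_multiplier(200)

# A saving of up to 55% leaves at least 45% of the baseline cost.
remaining_tco = cost_after_savings(baseline_tco, 55)

print(f"Throughput range: {low:.0f} to {high:.0f} tokens/sec")
print(f"Remaining TCO at the maximum saving: {remaining_tco:.0f}")
```

Under this reading, the "up to" figures are upper bounds, so a given deployment may land anywhere between the baseline and these values.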

Designed for versatility, Bud Runtime supports a wide range of deployments, including on-premises, cloud, and edge environments, ensuring scalability and flexibility for businesses of all sizes. The platform also includes enterprise-ready features such as built-in LLM guardrails, model monitoring, and advanced observability, allowing organizations to maintain control and visibility over their AI applications. Furthermore, Bud Runtime complies with key regulatory frameworks, including the White House and EU AI Guidelines, ensuring organizations can meet necessary standards. With features like active prompt analysis and optimization, Bud Runtime enhances model performance and helps businesses optimize their GenAI solutions for greater effectiveness.

As organizations continue to explore the potential of Generative AI, Bud Runtime offers a scalable, cost-effective solution that meets the diverse needs of enterprises while ensuring reliable and consistent performance across all devices.
