Bud Ecosystem and Intel Corporation have embarked on a strategic partnership aimed at accelerating the adoption of Generative AI (GenAI) across industries. The companies have signed a Memorandum of Understanding (MoU) to integrate Bud Ecosystem’s GenAI software stack with Intel processors, enabling cost-effective GenAI deployment for enterprises. Despite the transformative potential of GenAI, its enterprise…

Bud Ecosystem is excited to announce a strategic partnership with Infosys, a global leader in technology services and consulting. This collaboration aims to accelerate the enterprise adoption of Generative AI across industries by addressing key challenges that organizations face in the process. With the rising interest in harnessing the potential of GenAI, many enterprises encounter…

In a joint effort to enhance natural language processing for Hindi and its dialects, Intel, Tech Mahindra, and Bud Ecosystem have collaborated on Project Indus, a new open-source large language model (LLM). The project focuses on addressing the challenges of natural language generation and processing for India’s diverse linguistic landscape. The benchmark study of Project…

We’re excited to announce that Bud Ecosystem has joined the Open Platform for Enterprise AI (OPEA), a collaborative initiative aimed at advancing open, enterprise-ready generative AI solutions. OPEA was founded in early 2024 by Intel and officially contributed to the Linux Foundation’s LF AI & Data initiative. It was publicly launched on April 16, 2024, […]

Bud Ecosystem, Intel, and Microsoft have entered into a Memorandum of Understanding (MoU) to make cost-effective Generative AI deployments accessible to everyone through the Azure cloud. This partnership will leverage Intel’s 5th Generation Xeon processors along with Bud Ecosystem’s inference optimization engine, Bud Runtime, to lower the total cost of ownership (TCO) for GenAI […]

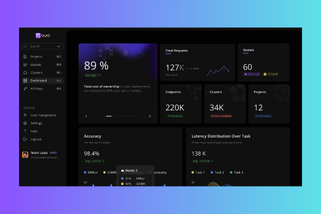

Abstract: While working on projects that involve inference with embedding models, we found inference error rates to be a major headache. We tested a few inference engines at higher context lengths (8000 tokens): HuggingFace’s Text Embeddings Inference (TEI) had a 94% error rate, and Infinity Inference Engine had a 37% error rate. […]

Bud Ecosystem is proud to announce that its flagship offering, Bud Runtime, has officially achieved Red Hat OpenShift certification. This milestone signifies Bud Runtime’s compatibility and performance optimization across Red Hat OpenShift environments, providing enterprises with cutting-edge AI serving and inference capabilities that deliver unmatched efficiency and scalability. Bud Runtime is a powerful, generative AI […]

Bud Ecosystem has unveiled Bud Runtime, a Generative AI serving and inference optimization software stack designed to deliver high-performance results across diverse hardware and operating systems. With its flexible deployment capabilities, Bud Runtime enables businesses to deploy production-ready Generative AI solutions on CPUs, HPUs, NPUs, and GPUs. As the demand for generative AI grows, businesses […]

Bud Ecosystem is excited to announce an enhancement to its hybrid Large Language Model (LLM) inference technology, now powered by Intel processors. By integrating domain-specific Small Language Models (SLMs) optimized for Intel CPUs alongside cloud-based LLMs, Bud’s solution offers a scalable and cost-effective approach for enterprise GenAI adoption. This innovative hybrid inference model offers a […]

Bud Ecosystem is thrilled to announce the release of five state-of-the-art open-source code models, meticulously fine-tuned for code generation tasks. These models represent significant improvements in performance, surpassing existing competitors and setting new benchmarks in the field. Among the releases, the Bud Code Millennials 34B model stands out with an impressive HumanEval pass rate of […]

Bud Ecosystem has open-sourced its fifth Large Language Model (LLM), GenZ 70B, an instruction-fine-tuned model offering a commercial license. The model is primarily fine-tuned for enhanced reasoning, roleplaying, and writing capabilities. In our initial evaluations, it scored 70.32 on the MMLU benchmark, which is higher than LLaMA2 70B’s score of […]

Bud Ecosystem has announced the launch of SQL Millennials, a pair of open-source Text-to-SQL translation models designed to make data retrieval and analysis accessible to users without technical SQL knowledge. The new models, sql-millennials-7b and sql-millennials-13b, are built on the Mistral 7B and CodeLLaMa 13B base models and have been fine-tuned using a diverse dataset of 100,000 […]