We’re excited to announce that Bud Ecosystem has joined the Open Platform for Enterprise AI (OPEA), a collaborative initiative aimed at advancing open, enterprise-ready generative AI solutions. OPEA was founded in early 2024 by Intel and officially contributed to the Linux Foundation’s LF AI & Data initiative. It was publicly launched on April 16, 2024, with partners including Red Hat, Hugging Face, and MariaDB.

Today, OPEA hosts 26 open-source projects, including microservice-level GenAI components, GenAI examples, enterprise RAG applications, GenAI Studio, and more. This partnership reflects our shared commitment to accelerating the adoption of practical, scalable, and secure GenAI technologies through open standards and composable systems.

Bud Ecosystem’s alignment with OPEA reflects a shared vision: to make generative AI not only more powerful, but more open and widely usable. Ditto PS, Chief Technology Officer at Bud Ecosystem, emphasized this synergy, saying, “Bud brings ease of adoption and use, scalability, production readiness, and stability, while OPEA offers a plethora of useful, battle-tested GenAI components that can be used to build the majority of industry-required GenAI use cases. This ensures easy adoption and scalability, effectively commoditizing GenAI at scale.”

Bud Ecosystem, as an AI research and engineering company, has already open-sourced over 20 models and released several tools and frameworks that simplify GenAI adoption for developers and enterprises alike. Bud is actively working with global system integrators such as Infosys, Accenture, and LTIMindtree to help organizations worldwide embrace generative AI with confidence and clarity. Partnering with OPEA allows Bud to further strengthen its commitment to open-source AI and empower enterprises with more accessible and viable GenAI adoption paths.

OPEA provides a comprehensive framework that simplifies the creation and deployment of enterprise-grade generative AI systems. At its core, OPEA is designed to integrate cutting-edge innovations across the AI ecosystem while prioritizing the practical requirements of enterprise adoption: performance, cost-effectiveness, security, and trust. OPEA equips businesses with modular building blocks, architectural blueprints, and assessment tools to help bring production-ready AI applications to life more efficiently.

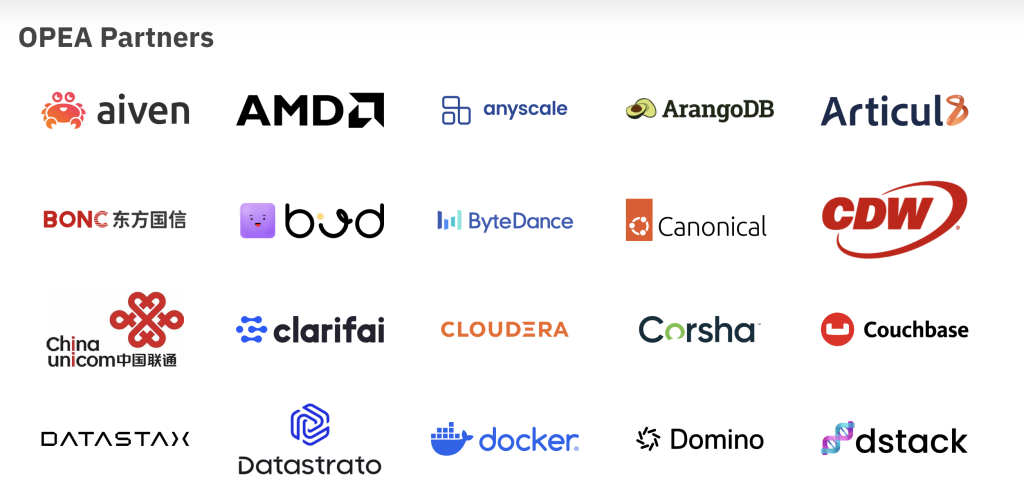

With Bud joining the initiative, OPEA now counts 56 partners in its growing ecosystem, including industry leaders such as Intel, AMD, Infosys, NetApp, Hugging Face, MariaDB, Red Hat, ZTE, VMware, Docker, and many others. This diverse and expanding network underscores OPEA’s momentum in uniting key players across the AI and enterprise technology landscape to drive innovation through open collaboration.

As a new member of OPEA, Bud plans to contribute a wide range of technologies and enhancements to the platform. These include intelligent agents and tools, service runtime components, and an advanced inference engine. Bud will also bring performance optimizations specifically tuned for hardware such as Gaudi 2 and Intel Xeon processors. In addition, the team is working on custom operating system distributions designed for different hardware environments, as well as full end-to-end application frameworks that help enterprises build and deploy GenAI applications with greater speed and efficiency.

The partnership between Bud Ecosystem and OPEA marks a meaningful step toward mainstream enterprise adoption of generative AI, built on open standards, modular architectures, and shared innovation.
