As Large Language Models grow in parameter count, they often achieve higher accuracy and better performance across a variety of tasks. However, this comes with a significant trade-off: inference, the process of making predictions, becomes slower and more resource-intensive. For many practical applications, the time and computational resources required to get predictions from these models are a substantial bottleneck.

Enter Early-Exit Neural Networks (EENNs), an approach designed to tackle this very challenge. EENNs introduce a mechanism that allows the model to ‘exit’ and produce a prediction before it has processed all the layers of the network. In other words, the model can make a decision at intermediate stages of computation. Because not every input has to pass through every layer, this can substantially reduce inference time and resource consumption.

However, the key challenge with EENNs is determining when it is appropriate to exit the network early. If the model exits too soon, its prediction may not be accurate enough, degrading performance. The critical question therefore becomes: when is it ‘safe’ for an EENN to go ‘fast’ without compromising the quality of its predictions?

Risks Associated with Early Exiting

When a model exits early, its predictions may be less accurate or reliable than those it would produce after completing all of its processing steps. Two main types of risk capture this: Performance Gap Risk and Consistency Risk.

Performance Gap Risk: This risk measures how much worse early-exit predictions are than the full model's predictions, with both evaluated against the ground truth. A large gap indicates that early exits are not reliable enough.

Consistency Risk: This risk measures how far early-exit predictions deviate from the predictions the model makes when it runs to completion. Because the full model's own output serves as the reference, this risk can be estimated without ground-truth labels, and keeping it low keeps early-exit predictions reliable.
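To make the difference between the two risks concrete, here is a minimal sketch that estimates both on a small calibration set. It assumes a simple 0-1 loss on predicted class labels (the research itself works with richer losses), and the helper names `zero_one_loss`, `performance_gap_risk`, and `consistency_risk` are hypothetical.

```python
import numpy as np

def zero_one_loss(pred, target):
    """Per-sample 0-1 loss (an assumption for illustration): 1.0 where pred differs from target."""
    return (pred != target).astype(float)

def performance_gap_risk(early_pred, full_pred, labels):
    """Mean extra loss of early-exit predictions over full-model predictions,
    both scored against the ground-truth labels."""
    gap = zero_one_loss(early_pred, labels) - zero_one_loss(full_pred, labels)
    return float(np.mean(gap))

def consistency_risk(early_pred, full_pred):
    """Mean disagreement between early-exit and full-model predictions.
    The full model is the reference, so no ground-truth labels are needed."""
    return float(np.mean(zero_one_loss(early_pred, full_pred)))

labels     = np.array([0, 1, 2, 1, 0])
full_pred  = np.array([0, 1, 2, 2, 0])  # full model: wrong at index 3
early_pred = np.array([0, 2, 2, 1, 0])  # early exit: wrong at index 1

print(performance_gap_risk(early_pred, full_pred, labels))  # 0.0
print(consistency_risk(early_pred, full_pred))              # 0.4
```

The two risks can tell different stories: in this toy case the early exit makes as many mistakes as the full model (zero performance gap), yet it disagrees with the full model on 40% of inputs.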

Managing these risks is crucial in real-world applications where early exiting is used, especially in scenarios that require quick decisions or operate under resource constraints.

Introducing Risk Control in EENNs

To mitigate the risks associated with early exiting, robust risk control frameworks can provide an effective solution. Applied to EENNs, risk control adapts the exit mechanism so that the risk of exiting early stays below a user-specified level while the network still exits as early as possible, balancing speed and accuracy.

In the research paper, the authors explore risk control for EENNs and demonstrate its effectiveness across a diverse range of tasks, including image classification, semantic segmentation, language modelling, and image generation with diffusion models.

- First, the researchers integrate risk control into EENNs, making these networks easier to deploy safely. They tie risk control directly to the exit decision: the exit threshold is chosen so that the risk of exiting early stays within a user-specified tolerance while the model still saves computation.

- They’ve also introduced new methods for assessing when it’s safe to make these early decisions. Unlike previous approaches that only looked at the accuracy of predictions, their methods also consider the uncertainty behind those predictions. This improvement means the model can make better decisions about when to take shortcuts.

- Their advancements are not limited to one type of task. In language modelling, their approach allows models to exit early more confidently, which improves efficiency over previous approaches.

- Additionally, they have applied this approach to new areas like image classification, semantic segmentation, and image generation. This broader application of early exits with risk control opens up new opportunities for making predictions faster and using resources more efficiently in various machine learning tasks.

Overall, their work demonstrates that integrating risk control with early-exit neural networks can substantially improve efficiency while keeping any loss in performance within a user-specified tolerance. As advancements in LLM inference optimization continue, this approach offers a promising path toward faster, more resource-efficient models whose accuracy loss is explicitly controlled.

How Do Early-Exit Neural Networks Work?

A neural network consists of several computational layers. Traditional neural networks pass every input through all of their layers before making a decision, which can be slow and resource-intensive. Early-Exit Neural Networks (EENNs) improve on this by allowing the network to make a decision partway through the process if it is sufficiently confident.

Think of an EENN like a quality control checkpoint in a factory. As an item moves down the production line, it passes through several checkpoints. If the item meets quality standards at an earlier checkpoint, it can skip further checks and be approved sooner.

In the case of EENNs, these “checkpoints” are exits attached to intermediate layers of the network. Each exit produces a prediction together with a confidence score. If the network is confident enough about its prediction at an earlier exit, it stops there, saving time and computational resources. The key features of EENNs are:

- Dynamic Processing: Instead of always using all layers for every input, EENNs adjust the number of layers used based on how complex or “difficult” the input is.

- Probabilistic Predictions: Each exit in the network provides a prediction and a confidence score. The confidence score tells us how sure the network is about its prediction at that point.

- Early Decision Making: At test time, if the confidence score from an earlier layer is high enough, the network will make its prediction and exit early. This is done using a predefined confidence threshold.

- Efficiency Gains: By allowing the network to make decisions earlier when it’s confident, EENNs reduce the amount of computation needed and speed up the process.
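The inference loop described above can be sketched in a few lines. This is a minimal sketch, assuming the confidence score at each exit is the maximum softmax probability and that the threshold is a number `lam` in [0, 1]; `early_exit_forward` and the toy `double`/`identity` functions are hypothetical stand-ins for a real network's blocks and exit heads.

```python
import numpy as np

def softmax(logits):
    """Numerically stable softmax over a 1-D logit vector."""
    z = np.exp(logits - np.max(logits))
    return z / z.sum()

def early_exit_forward(x, blocks, heads, lam):
    """Run blocks one at a time and stop at the first exit whose top-class
    probability reaches lam. Returns (predicted class, exit index).
    blocks[i]: hidden state -> hidden state; heads[i]: hidden state -> logits."""
    h = x
    last = len(blocks) - 1
    for i, (block, head) in enumerate(zip(blocks, heads)):
        h = block(h)                          # compute only up to this depth
        probs = softmax(head(h))              # prediction of exit i
        if probs.max() >= lam or i == last:   # confident enough, or no deeper exit left
            return int(np.argmax(probs)), i

def double(h):
    return 2.0 * h  # toy block: each layer sharpens the logits

def identity(h):
    return h        # toy exit head

x = np.array([0.2, 0.1, 0.0])
blocks, heads = [double] * 3, [identity] * 3
for lam in (0.3, 0.45, 0.9):
    print(lam, early_exit_forward(x, blocks, heads, lam))
# 0.3 (0, 0)
# 0.45 (0, 1)
# 0.9 (0, 2)
```

Because later blocks are only called when an earlier exit does not fire, a lower threshold directly translates into fewer blocks evaluated per input.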

In EENNs, a key parameter known as the threshold (denoted as λ) helps balance accuracy and efficiency. Lower threshold values enable the network to make decisions earlier, speeding up the process and reducing resource usage, but this often sacrifices accuracy, as the network might not be fully confident in its early predictions. Conversely, higher threshold values necessitate greater confidence before making a decision, which typically results in more accurate predictions but also makes the process slower and more resource-intensive.

To set the threshold, the performance and efficiency of the network are usually measured on separate, held-out data, and a threshold is chosen that fits within the user’s computational budget while still meeting performance goals. The network’s own confidence is often used as the signal for when to exit early. However, this can be unreliable: confidence scores do not always reflect the true quality of the predictions, and a threshold picked this way carries no formal guarantee about how much performance is lost.
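The hold-out procedure described above might look like the following minimal sketch. It assumes each exit's prediction and confidence have already been computed for every hold-out sample; `exit_indices`, `evaluate`, and `select_threshold` are hypothetical helpers, and the tiny dataset is made up for illustration.

```python
import numpy as np

def exit_indices(conf, lam):
    """Index of the first exit whose confidence reaches lam (final exit if none).
    conf has shape (n_samples, n_exits)."""
    hit = conf >= lam
    hit[:, -1] = True               # the final exit always produces an answer
    return hit.argmax(axis=1)       # argmax returns the first True per row

def evaluate(conf, preds, labels, lam):
    """Hold-out accuracy and average number of exits evaluated at threshold lam."""
    idx = exit_indices(conf, lam)
    chosen = preds[np.arange(len(labels)), idx]
    return float(np.mean(chosen == labels)), float(np.mean(idx + 1))

def select_threshold(conf, preds, labels, target_acc, grid):
    """Smallest (fastest) threshold on the grid that meets the accuracy target."""
    for lam in sorted(grid):
        acc, depth = evaluate(conf, preds, labels, lam)
        if acc >= target_acc:
            return lam, acc, depth
    return None  # no threshold on the grid meets the target

# Toy hold-out set: 4 samples, 3 exits (made-up numbers).
conf = np.array([[0.95, 0.97, 0.99],
                 [0.60, 0.85, 0.95],
                 [0.70, 0.80, 0.90],
                 [0.40, 0.55, 0.92]])
preds = np.array([[1, 1, 1],
                  [0, 2, 2],
                  [1, 0, 0],
                  [2, 2, 1]])
labels = np.array([1, 2, 0, 1])

print(evaluate(conf, preds, labels, 0.7))   # (0.75, 1.75)
print(select_threshold(conf, preds, labels, target_acc=0.9,
                       grid=[0.5, 0.7, 0.75, 0.9, 0.99]))  # (0.75, 1.0, 2.0)
```

This picks the fastest threshold that meets the target on this particular hold-out set. Since accuracy need not be monotone in the threshold and the hold-out set is finite, nothing guarantees the target will hold on new data, which is the gap that risk control is meant to close.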

EENNs as Risk-Controlling Predictors

In their research, the authors outline a method for using EENNs as risk-controlling predictors and then motivate and formalise how various risk control frameworks can be adapted to the early-exit setting.

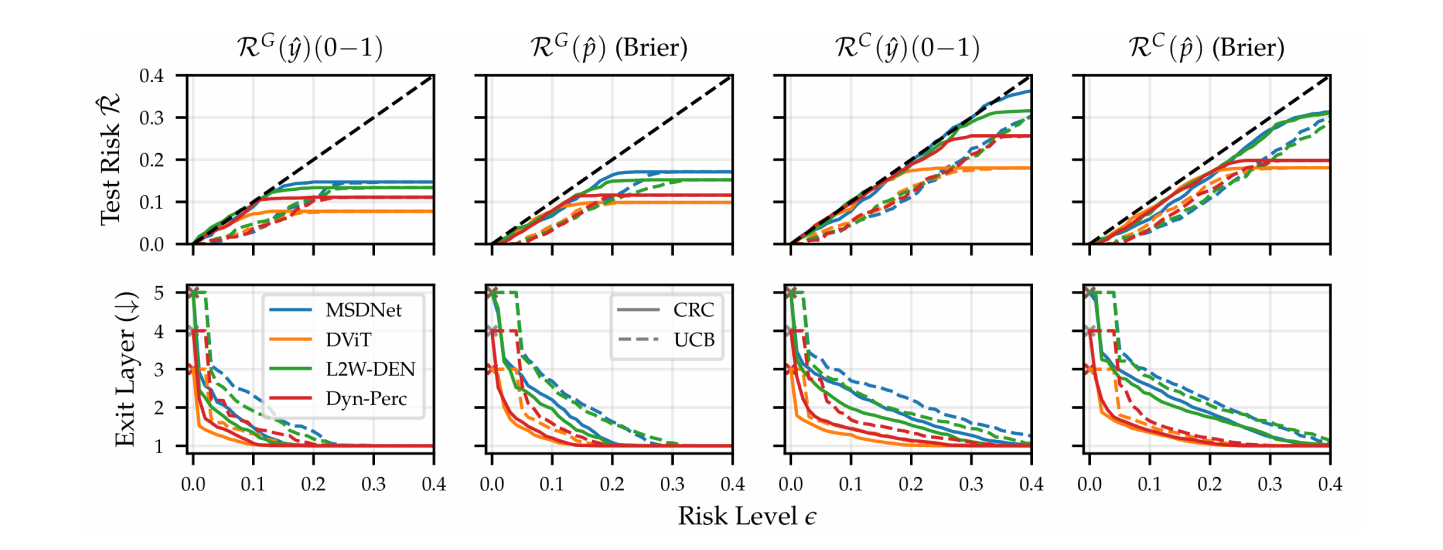

- The authors propose using Performance Gap Risk and Consistency Risk to control the quality of both model predictions and predictive distributions.

- The research team uses the Brier score, a scoring rule well suited to assessing probabilistic forecasts. Its mathematical formulation is particularly favourable for risk control compared to other widely used probabilistic metrics; for instance, unlike the log-likelihood, it is bounded, which the risk control guarantees below rely on.

- The research team proposes an improvement to Conformal Risk Control (CRC), adapting it to the early-exit setting, where predictions can stop early. CRC controls the risk in expectation over the draw of the calibration set.

- To provide a stronger guarantee, the research team also aims to control the risk with high probability over the draw of the calibration set, rather than only on average. They achieve this using an Upper Confidence Bound (UCB) on the risk, following Bates et al.
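To make these ingredients concrete, here is a minimal sketch of Brier-score-based threshold selection with a CRC-style bound and a Hoeffding-based UCB. Several choices are assumptions, not the paper's exact method: the per-sample loss is the Brier-score performance gap clipped at zero (so it lies in [0, 2]), confidence is the maximum class probability, and both selectors scan thresholds from high to low and stop at the first violation, which is the rule Bates et al. use for UCB-based control rather than the paper's specific CRC modification. All function names are hypothetical and the data is synthetic.

```python
import numpy as np

def brier_score(probs, labels):
    """Multi-class Brier score per sample: sum_k (p_k - 1[k == y])^2, in [0, 2]."""
    onehot = np.eye(probs.shape[-1])[labels]
    return np.sum((probs - onehot) ** 2, axis=-1)

def gap_losses(probs, labels, lam):
    """Per-sample performance-gap loss at threshold lam.
    probs: (n, n_exits, n_classes) predictive distributions at every exit."""
    hit = probs.max(axis=-1) >= lam            # confidence = max class probability
    hit[:, -1] = True                          # final exit always answers
    idx = hit.argmax(axis=1)                   # first exit that fires
    early = probs[np.arange(len(labels)), idx]
    gap = brier_score(early, labels) - brier_score(probs[:, -1], labels)
    # Clipping (an assumption) keeps losses in [0, 2]; it can only raise the risk.
    return np.maximum(gap, 0.0)

def crc_bound(losses, B=2.0):
    """CRC-style adjusted risk: (n / (n + 1)) * mean + B / (n + 1)."""
    n = len(losses)
    return n / (n + 1) * losses.mean() + B / (n + 1)

def hoeffding_ucb(losses, delta, B=2.0):
    """(1 - delta) upper confidence bound on the risk for losses in [0, B]."""
    n = len(losses)
    return losses.mean() + B * np.sqrt(np.log(1.0 / delta) / (2 * n))

def select_lambda(probs, labels, eps, bound, grid):
    """Scan from the highest (safest) threshold downwards; return the lowest
    threshold such that it and every higher one satisfy bound(...) <= eps."""
    chosen = None
    for lam in sorted(grid, reverse=True):
        if bound(gap_losses(probs, labels, lam)) > eps:
            break
        chosen = lam
    return chosen  # None means no early exiting could be certified

# Synthetic calibration set: deeper exits have sharper (more confident) logits.
rng = np.random.default_rng(0)
n, n_exits, n_classes = 2000, 4, 10
scale = np.arange(1, n_exits + 1)[None, :, None]
logits = rng.normal(size=(n, n_exits, n_classes)) * scale
e = np.exp(logits - logits.max(axis=-1, keepdims=True))
probs = e / e.sum(axis=-1, keepdims=True)
labels = probs[:, -1].argmax(axis=1)  # synthetic labels for illustration only

grid = np.linspace(0.0, 1.0, 101)
lam_crc = select_lambda(probs, labels, eps=0.05, bound=crc_bound, grid=grid)
lam_ucb = select_lambda(probs, labels, eps=0.05,
                        bound=lambda losses: hoeffding_ucb(losses, delta=0.1), grid=grid)
print(lam_crc, lam_ucb)
```

In this sketch the Hoeffding UCB adds a margin of order B·sqrt(log(1/δ)/n), which is always at least as large as the CRC-style correction, so it certifies a threshold at least as high (and therefore no faster). That is the price of a guarantee that holds with high probability rather than on average.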

The research addresses how to configure early-exit mechanisms in neural networks safely, proposing risk control as a way to balance the efficiency of these networks against their performance. Its key conclusions are as follows:

- Empirical Validation: The proposed method has been tested on various tasks and has shown improvements over previous approaches, particularly in early-exit language modelling.

- Limitations and Potential Improvements: The main limitation of the approach is that it uses a single exit threshold shared across all exits. The authors suggest that using multiple thresholds could further enhance efficiency.

As machine learning models continue to grow in complexity, the ability to make predictions faster while maintaining high performance will be crucial. Early-exit neural networks, combined with risk control, offer a viable path to achieving this balance. For developers and researchers working with large-scale models, exploring these approaches can lead to more efficient and effective solutions, ultimately advancing the field of machine learning and its practical applications.
