Overview

Customer support services are increasingly challenged to meet rising consumer expectations for quick, accurate, and personalised responses. Traditional systems often fall short, particularly with complex product queries, due to limitations in context understanding, slow response times, and poor accuracy with legacy technologies.

To address these issues, Bud Ecosystem, FoundationFlow, and Intel developed an advanced Retrieval-Augmented Generation (RAG)-based chatbot solution powered by Intel® Xeon® processors. This solution enhances accuracy and efficiency in handling product catalogue inquiries. It integrates external knowledge sources, provides direct access to product information, and features improved table parsing capabilities. The BudServe engine further leverages Intel® Xeon® processors for superior performance. This case study explores the industry challenges the solution addresses, how the solution works, and the key evaluation outcomes.

The limitations of traditional RAG systems for chatbot-driven customer support

Current chatbot solutions often rely on traditional Retrieval-Augmented Generation (RAG) systems for customer support. While these systems automate responses, they have several significant limitations that impact their effectiveness:

- Limited Contextual Understanding: Traditional RAG systems struggle to grasp the broader context of user queries, leading to responses that can be irrelevant or incomplete. Even if the system retrieves seemingly relevant documents, it may still fail to provide accurate answers due to inadequate contextual comprehension.

- Slow Response Times: On infrastructure based on Intel® Xeon® processors, traditional RAG systems are hampered by slow token generation and processing delays. This sluggish performance results in longer wait times for responses, which can frustrate customers accustomed to quick service and negatively affect their perception of support quality.

- Poor Table Comprehension: Many customer queries involve detailed product specifications presented in tables. Traditional RAG systems often struggle to effectively parse and interpret this tabular data, leading to inaccurate or incomplete responses for queries that require precise information.

These limitations hinder the overall effectiveness and efficiency of traditional RAG-based chatbot solutions.

The Solution

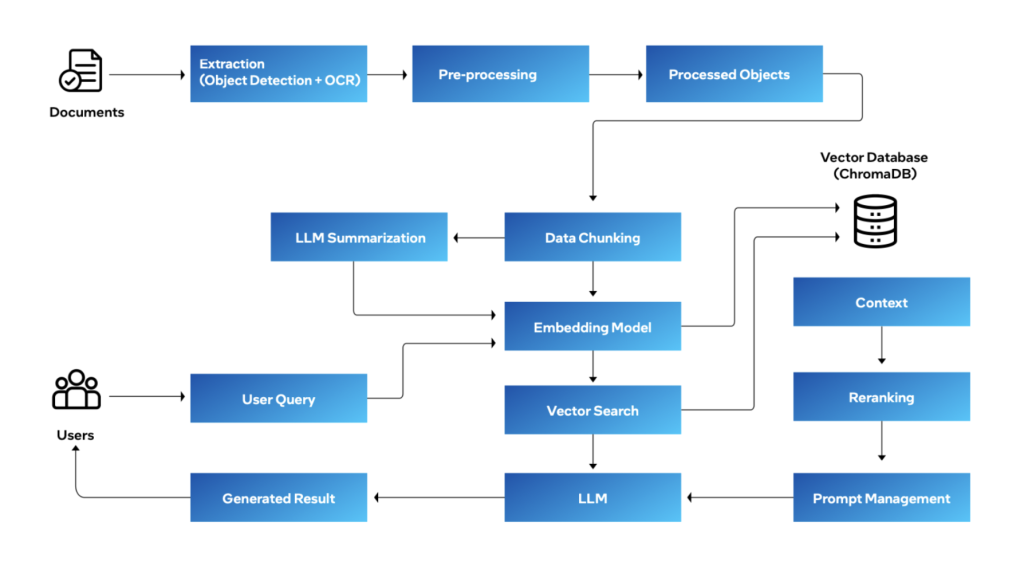

Bud Ecosystem, FoundationFlow, and Intel partnered to develop a custom Retrieval-Augmented Generation (RAG) solution aimed at enhancing context understanding, improving response times and accuracy, and creating a more efficient customer support system. Leveraging FoundationFlow’s Co-pilot Builder module and Intel® Xeon® processors, the custom chatbot integrates seamlessly with external knowledge sources to provide precise and informative responses to product-related inquiries. Key features of this RAG solution include:

- Direct Product Catalogue Access: The system removes the need for manual data entry or separate database integrations by directly accessing extensive product catalogues and documents. This ensures the chatbot always has up-to-date product specifications and details, enhancing response accuracy.

- Enhanced Table Parsing: The solution includes advanced capabilities for parsing and interpreting tables within product catalogues. This allows the chatbot to accurately address complex queries about product features and configurations, overcoming a significant limitation of traditional RAG systems.

- Optimisation for Intel® Architecture: The RAG system is tuned for Intel® Xeon® processors, leveraging their high-performance processing and scalability. This optimisation results in fast response times and smooth operation, even with a high volume of inquiries and complex product data, while also allowing efficient deployment across enterprise organisations with optimised infrastructure costs.
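To make the table-parsing idea concrete, the following is a minimal sketch of one common approach: flattening each table row into a self-describing text chunk so that column headers travel with the values during retrieval. It is not the solution's actual implementation, which the case study does not describe. The function names (`table_to_row_chunks`, `retrieve`), the sample kettle table, and the token-overlap scoring (a simple stand-in for embedding similarity) are all assumptions made for illustration.

```python
import re


def table_to_row_chunks(table_name, headers, rows):
    """Turn each table row into a text chunk that repeats the column headers."""
    chunks = []
    for row in rows:
        pairs = [f"{header}: {value}" for header, value in zip(headers, row)]
        chunks.append(f"{table_name} | " + "; ".join(pairs))
    return chunks


def tokenize(text):
    """Lowercase the text and split it into a set of alphanumeric tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query, chunks, top_k=1):
    """Rank chunks by token overlap with the query.

    Hypothetical stand-in for the embedding-based retriever a real RAG
    system would use.
    """
    query_tokens = tokenize(query)
    ranked = sorted(
        chunks,
        key=lambda chunk: len(query_tokens & tokenize(chunk)),
        reverse=True,
    )
    return ranked[:top_k]


# Illustrative product table (made-up data, not from the case study).
headers = ["Model", "Capacity (L)", "Power (W)"]
rows = [["KX-100", "1.5", "1800"], ["KX-200", "2.0", "2200"]]

chunks = table_to_row_chunks("Kettle specifications", headers, rows)
best = retrieve("What is the power of the KX-200?", chunks)
print(best[0])
```

Because every chunk carries its own headers, a retrieved row can be passed to the language model without the rest of the table, which is one way to avoid the loss of column context that plain text splitting causes.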

Bud Ecosystem’s proprietary inference engine significantly boosts the performance of the overall stack, delivering faster response times even under high user loads. The engine supports lower-compute devices and provides performance comparable to GPU solutions. Bud’s inference engine enables the following:

- High Concurrency: The RAG system architecture is optimised for handling high concurrency, ensuring smooth operation even during peak usage. Rigorous testing has demonstrated the system’s capability to support over 100 concurrent users on a single 48-core server without noticeable performance degradation.

- Efficient Token Generation: The system produces an average output of 125 tokens per second, enhancing user experience by delivering faster responses to queries.
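As an illustration of how figures like these could be measured, the sketch below runs a batch of simultaneous requests and computes aggregate tokens per second. It is a hedged example, not the evaluation harness used in this case study: `fake_generate` is a hypothetical stand-in for a real model call, and the case study does not state whether its tokens-per-second figure is aggregate or per request, so the aggregate interpretation here is an assumption.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fake_generate(prompt):
    """Hypothetical stand-in for a model call; returns a list of output tokens."""
    time.sleep(0.01)  # simulate inference latency
    return prompt.split() * 5


def tokens_per_second(total_tokens, elapsed_seconds):
    """Aggregate throughput: tokens produced divided by wall-clock time."""
    if elapsed_seconds <= 0:
        raise ValueError("elapsed_seconds must be positive")
    return total_tokens / elapsed_seconds


def run_load_test(generate, prompts, concurrency):
    """Send all prompts through a thread pool and measure aggregate throughput."""
    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        outputs = list(pool.map(generate, prompts))
    elapsed = time.perf_counter() - start
    total = sum(len(output) for output in outputs)
    return total, tokens_per_second(total, elapsed)


# 100 simulated users, matching the concurrency level mentioned above.
prompts = ["which model supports fast charging"] * 100
total_tokens, tps = run_load_test(fake_generate, prompts, concurrency=100)
print(f"{total_tokens} tokens, {tps:.1f} tokens/s aggregate")
```

The printed throughput reflects only the simulated latency and says nothing about the real system; swapping `fake_generate` for an actual inference client would be needed to obtain meaningful numbers.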

Overall, Bud Ecosystem’s inference engine ensures robust performance and efficiency, contributing to a seamless and responsive customer support experience. This custom RAG solution addresses critical limitations of traditional systems, offering a more effective and scalable approach to customer support.

Key Results

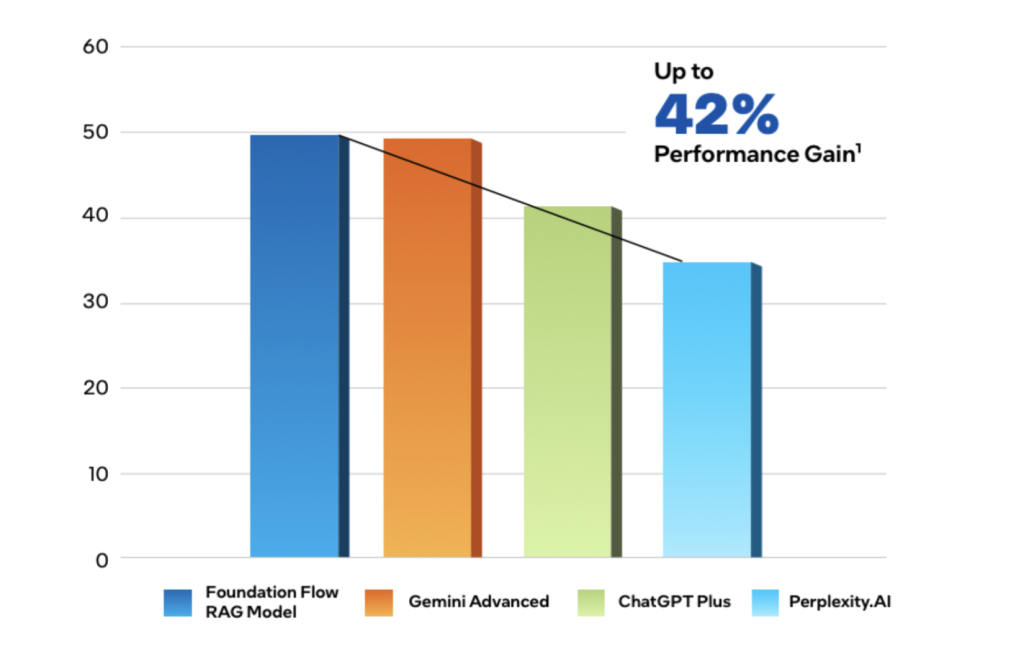

The chatbot solution has demonstrated superior performance compared to leading cloud-based solutions like Google Gemini Advanced, ChatGPT Plus, and Perplexity.

1. Performance Improvement

- Handling Product Catalogue Queries: The solution delivers an improvement of nearly 60% in handling product catalogue queries.

- Average Output: The system achieves an average of 124.89 tokens per second (TPS), indicating high efficiency and speed in generating responses.

2. Impact on Customer Support

- Efficiency and Accuracy: The RAG approach has significantly enhanced the chatbot’s ability to retrieve and generate accurate responses from a vast information repository, leading to improved customer support.

- Direct Product Catalogue Access: The chatbot’s capability to access the most current and comprehensive product information directly from the catalogue has sped up response times and ensured accuracy.

- Enhanced Table Parsing: Improved parsing of tabular data allows the chatbot to handle complex queries involving detailed product specifications more effectively.

Overall, these enhancements lead to a more streamlined customer support operation, reducing customer frustration and improving the overall experience.
