Overview

This case study examines the inference performance of the Mistral 7B model, a large language model with 7.3 billion parameters, to assess its viability for production-ready Generative AI (GenAI) solutions. As many organisations struggle to move from pilot projects to full-scale deployments due to the high costs associated with GPU-based inference, the study benchmarks Mistral 7B using three inference engines: vLLM, TGI, and Bud Runtime. Key performance metrics, including input and output token throughput and execution time, highlight significant differences among the engines. Notably, Bud Runtime emerged as the most efficient, offering the highest throughput and lowest execution time, making it ideal for production environments. These insights emphasise the critical role of inference engine selection in optimising the deployment of LLMs like Mistral 7B, ultimately facilitating broader adoption of GenAI technologies.

LLM inference for production-ready GenAI solutions

The advent of Generative AI (GenAI) technology has opened up new frontiers in various industries, offering unprecedented capabilities for natural language processing, content generation, and more. However, despite the promising potential of large language models (LLMs), the journey from pilot projects and proofs of concept (PoCs) to full-scale production deployment remains a significant challenge. A primary hurdle is the high cost associated with LLM inference, predominantly driven by the computational power required, which typically necessitates the use of Graphics Processing Units (GPUs).

The infrastructure required for GPU-based inference can be prohibitively expensive for many organisations, limiting the broader adoption of GenAI solutions. As a result, many enterprises find themselves stalled at the pilot stage, unable to justify the investment required for production-ready deployments. This situation underscores the need for cost-effective alternatives that can deliver comparable performance without the financial burden associated with GPU resources.

To facilitate enterprise-level adoption of GenAI technologies, it is essential to explore viable solutions for LLM inference on less costly hardware, such as Central Processing Units (CPUs). If CPU-based inference can achieve performance levels comparable to GPU-based solutions, it could significantly reduce operational costs and enable more organisations to deploy LLMs in a production environment. This case study aims to benchmark the inference performance of the Mistral 7B model on GPUs and assess its viability on CPUs, providing insights that could pave the way for more sustainable GenAI implementations.

Mistral 7B

Mistral 7B is a large language model featuring 7.3 billion parameters. It demonstrates exceptional performance across a variety of benchmarks, notably outperforming Llama 2 13B in all evaluated metrics and matching or exceeding the capabilities of Llama 1 34B in many areas. The model is particularly strong in code-related tasks, closely rivalling CodeLlama 7B while maintaining robust performance in English language tasks.

Key features of Mistral 7B include Grouped-Query Attention (GQA), in which groups of query heads share a single set of key and value heads. This shrinks the key-value cache and speeds up inference, making the model more efficient for practical applications. Additionally, it employs Sliding Window Attention (SWA), a technique in which each token attends only to a fixed-size window of preceding tokens, enabling the model to process longer sequences at reduced computational cost. These features collectively contribute to Mistral 7B’s strong performance and suitability for a variety of tasks.
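To make the two attention mechanisms more concrete, here is a minimal sketch in plain Python. It is illustrative only and is not Mistral's implementation: the function names, the toy sequence length, the window size, and the head counts are all assumptions chosen for readability.

```python
def sliding_window_mask(seq_len: int, window: int) -> list[list[bool]]:
    """Causal sliding-window mask (illustrative helper, not from the source).

    mask[i][j] is True when query position i may attend to key position j,
    i.e. j is at most `window - 1` positions behind i and never ahead of it.
    """
    return [
        [0 <= i - j < window for j in range(seq_len)]
        for i in range(seq_len)
    ]


def kv_head_for_query_head(q_head: int, n_q_heads: int, n_kv_heads: int) -> int:
    """Map a query head to the key/value head it shares under GQA.

    Assumes n_q_heads is a multiple of n_kv_heads, so consecutive query
    heads form equally sized groups that share one key/value head.
    """
    group_size = n_q_heads // n_kv_heads
    return q_head // group_size


if __name__ == "__main__":
    # Toy sizes: 5 tokens, window of 3 (hypothetical values).
    for row in sliding_window_mask(seq_len=5, window=3):
        print("".join("x" if allowed else "." for allowed in row))

    # Toy sizes: 8 query heads sharing 2 key/value heads (hypothetical values).
    print([kv_head_for_query_head(h, 8, 2) for h in range(8)])
```

With a full causal mask, the cost of attention per token grows with sequence length; with the windowed mask, each row has at most `window` allowed positions. Under GQA, the key-value cache only needs to store `n_kv_heads` heads rather than `n_q_heads`.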

Mistral 7B’s performance is validated through a comprehensive evaluation pipeline, which encompasses a wide range of benchmarks categorised into themes such as commonsense reasoning, world knowledge, reading comprehension, mathematics, and coding. Its remarkable abilities in these areas position Mistral 7B as a leading choice for enterprises seeking powerful and efficient LLM solutions for diverse applications.

Benchmarking Mistral 7B inference performance on GPUs

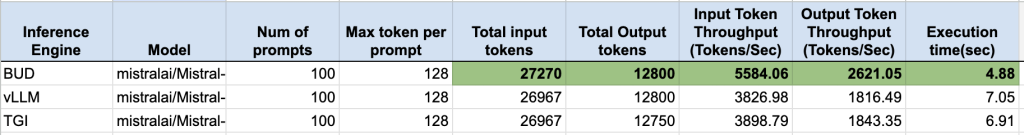

In our benchmarking experiment, we evaluated the inference performance of Mistral 7B using three prominent LLM inference engines: vLLM, TGI, and Bud Runtime. The experiments were conducted on a single NVIDIA A100 GPU with 80 GB of memory, chosen for its ability to handle large language models efficiently.

We focused on several key performance metrics throughout the evaluation, including total input tokens, total output tokens, input token throughput (measured in tokens per second), output token throughput (also measured in tokens per second), and overall execution time in seconds. These parameters provide a comprehensive view of the model’s performance and efficiency during inference.
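The relationship between these metrics can be sketched as follows. This is a minimal example that assumes throughput is simply the total token count divided by the overall execution time; the study does not spell out its exact formula, and the `RunMetrics` class and the sample numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class RunMetrics:
    """Raw measurements from one benchmark run (hypothetical container)."""
    total_input_tokens: int
    total_output_tokens: int
    execution_time_s: float

    @property
    def input_throughput(self) -> float:
        # Assumed definition: input tokens processed per second of wall time.
        return self.total_input_tokens / self.execution_time_s

    @property
    def output_throughput(self) -> float:
        # Assumed definition: output tokens generated per second of wall time.
        return self.total_output_tokens / self.execution_time_s


if __name__ == "__main__":
    # Made-up numbers for illustration only, not results from the study.
    run = RunMetrics(total_input_tokens=1000,
                     total_output_tokens=500,
                     execution_time_s=2.0)
    print(f"input:  {run.input_throughput:.2f} tokens/sec")
    print(f"output: {run.output_throughput:.2f} tokens/sec")
```

Under this definition, two engines given the same workload differ in throughput only through execution time, so the three metrics should move together.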

The results from this benchmarking effort will highlight the strengths and weaknesses of each inference engine when running Mistral 7B (mistralai/Mistral-7B-Instruct-v0.3), allowing us to assess which engine delivers the best performance under the specific conditions of our test environment. This analysis is crucial for organisations considering the deployment of Mistral 7B in production, as it informs choices related to infrastructure and operational efficiency.

Key Results

The benchmarking results for Mistral 7B across the three inference engines (TGI, vLLM, and Bud Runtime) show clear differences in throughput and execution time.

- Bud Runtime emerged as the standout performer, achieving the highest input token throughput at 5,584.06 tokens/sec and the highest output token throughput at 2,621.05 tokens/sec. Its execution time of just 4.88 seconds demonstrates its efficiency, making it a strong choice for scenarios requiring rapid response times and high throughput. This performance advantage suggests that Bud Runtime may be particularly well-suited for production environments where scaling and responsiveness are critical.

- TGI and vLLM, while showing competent performance, fell short of Bud Runtime in both throughput and execution time. TGI recorded an input token throughput of 3,898.79 tokens/sec and an output token throughput of 1,843.35 tokens/sec, with an execution time of 6.91 seconds. vLLM, on the other hand, had slightly lower throughputs of 3,826.98 tokens/sec for input and 1,816.49 tokens/sec for output, and a comparable execution time of 7.05 seconds. These results indicate that while both engines can effectively run Mistral 7B, they do not match the efficiency and speed of Bud Runtime in this test.
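The figures reported above can be compared directly with a short script. The sketch below uses only the numbers quoted in this section; the dictionary layout and the `speedups` helper are illustrative assumptions, not part of the benchmark tooling.

```python
# Figures as reported in this case study (tokens/sec and seconds).
RESULTS = {
    "Bud Runtime": {"input_tps": 5584.06, "output_tps": 2621.05, "time_s": 4.88},
    "TGI":         {"input_tps": 3898.79, "output_tps": 1843.35, "time_s": 6.91},
    "vLLM":        {"input_tps": 3826.98, "output_tps": 1816.49, "time_s": 7.05},
}


def speedups(results: dict, reference: str = "Bud Runtime") -> dict:
    """Ratio of the reference engine to every other engine.

    Throughput ratios are reference / other (higher means the reference is
    faster); the time ratio is other / reference for the same reason.
    """
    ref = results[reference]
    ratios = {}
    for name, metrics in results.items():
        if name == reference:
            continue
        ratios[name] = {
            "input_tps_ratio": ref["input_tps"] / metrics["input_tps"],
            "output_tps_ratio": ref["output_tps"] / metrics["output_tps"],
            "time_ratio": metrics["time_s"] / ref["time_s"],
        }
    return ratios


if __name__ == "__main__":
    for engine, r in speedups(RESULTS).items():
        print(f"Bud Runtime vs {engine}: "
              f"input x{r['input_tps_ratio']:.2f}, "
              f"output x{r['output_tps_ratio']:.2f}, "
              f"time x{r['time_ratio']:.2f}")
```

On these numbers, Bud Runtime's throughput and execution-time advantage over both TGI and vLLM falls between roughly 1.4x and 1.5x.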

Overall, the analysis highlights the critical role that inference engine selection plays in maximising the performance of LLMs like Mistral 7B. Organisations aiming to deploy Mistral 7B in a production setting should consider these findings, as choosing Bud Runtime could lead to improved operational efficiency and better user experiences due to its superior performance metrics.
