In the fast-moving world of Generative AI, where innovation often outpaces regulation, licensing has emerged as an increasingly critical, yet often overlooked, challenge. Every AI model you use, whether open-source or proprietary, comes with its own set of licensing terms, permissions, and limitations. These licenses determine what you can do with a model, who can use it, how it can be deployed, and whether you’ll need to pay, credit the authors, or avoid commercial applications altogether.

At Bud, we’ve consistently focused on building infrastructure that makes working with Generative AI models more practical, secure, and developer-friendly. Today, we’re excited to share a small yet powerful feature we’ve added to the Bud Runtime: automated license agreement analysis.

This new capability helps you instantly understand the most important aspects of a model’s license without spending hours poring over complex legal text. It’s designed to make compliance simple, transparent, and actionable—especially for developers and enterprises who work with multiple models across varied projects.

The Problem: A Growing Maze of AI Model Licenses

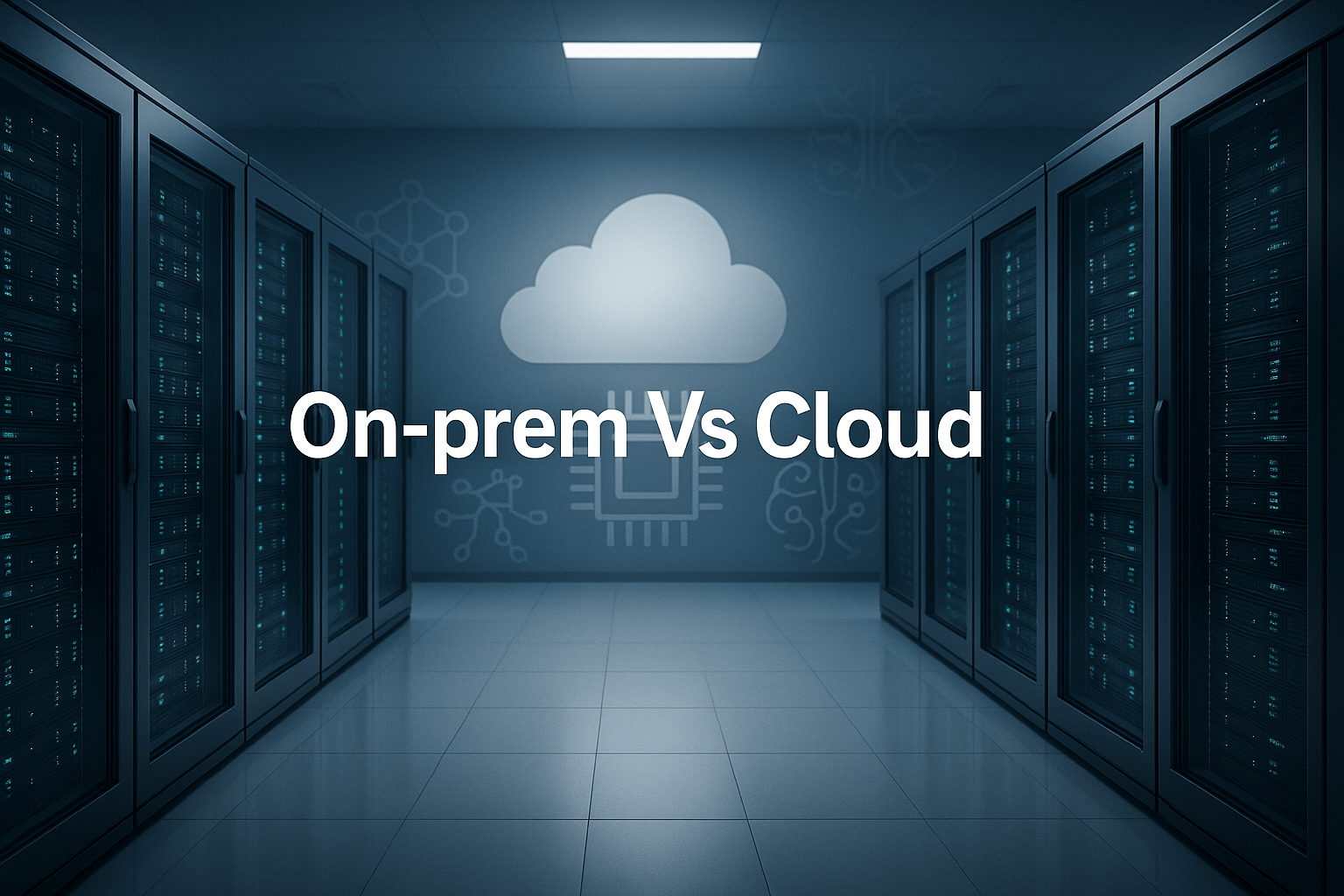

Just a few years ago, the licensing landscape for machine learning models was relatively simple. Most models were either academic releases under permissive licenses such as MIT or Apache 2.0, or closed-source systems with limited access.

But the explosion of open-source and commercial models, especially in the Generative AI space, has drastically changed this. Today, it’s not uncommon for developers to compare or use dozens of models such as LLaMA, Mistral, Stable Diffusion, Mixtral, Gemma, or Claude, and the licensing terms behind them can differ considerably.

These licenses can vary in:

- Commercial vs non-commercial usage

- Attribution or citation requirements

- Royalty or revenue-sharing clauses

- Geographic restrictions

- Limitations based on use cases

- Redistribution or fine-tuning rules
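
To make these dimensions easier to compare across models, they can be captured as structured data. The sketch below is a hypothetical schema (`LicenseTerms` and its field names are assumptions, not a Bud Runtime format), and the two entries are simplified summaries of the MIT and CC BY-NC 4.0 licenses rather than complete legal readings.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class LicenseTerms:
    """Hypothetical schema mirroring the license dimensions listed above."""
    name: str
    commercial_use: bool
    attribution_required: bool
    royalties: bool
    geographic_restrictions: tuple[str, ...] = ()
    use_case_restrictions: tuple[str, ...] = ()
    redistribution_allowed: bool = True
    derivatives_allowed: bool = True  # covers fine-tuning and other modifications


def differences(a: LicenseTerms, b: LicenseTerms) -> list[str]:
    """Return the names of the dimensions on which two licenses disagree."""
    return [
        f.name
        for f in fields(LicenseTerms)
        if f.name != "name" and getattr(a, f.name) != getattr(b, f.name)
    ]


# Simplified, illustrative summaries; always read the full license text.
MIT = LicenseTerms("MIT", commercial_use=True, attribution_required=True, royalties=False)
CC_BY_NC_4 = LicenseTerms(
    "CC-BY-NC-4.0", commercial_use=False, attribution_required=True, royalties=False
)

print(differences(MIT, CC_BY_NC_4))  # ['commercial_use']
```

Under this simplified schema, a field-by-field comparison surfaces the one dimension separating the two licenses, commercial use, which is exactly the kind of difference that is easy to miss when reading full legal texts side by side.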

If you’re an individual developer, this can be confusing and risky. If you’re part of a legal or compliance team at an enterprise, this becomes a bottleneck and a liability.

Automated License Analysis in Bud Runtime

The Bud Runtime now automatically scans and summarizes license agreements associated with each AI model you use. This summary includes:

- Plain-language explanation of key terms

- Highlights of critical clauses

- Visual indicators (✅ for favorable terms, ❌ for limitations or risks)

- Custom guidance based on your project’s context (e.g., commercial deployment)

This lets you make faster, safer decisions during model selection, integration, and deployment.
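
Project-specific guidance amounts to matching a license’s permissions against how the model will actually be used. The following is a minimal sketch under stated assumptions: `ProjectContext`, the summary keys, and the message wording are hypothetical and do not reflect Bud Runtime’s actual output. Permissions missing from the summary are treated as not granted, so the check errs toward flagging.

```python
from dataclasses import dataclass


@dataclass
class ProjectContext:
    """Hypothetical description of how a model will be used."""
    commercial: bool
    redistributes_weights: bool = False
    fine_tunes: bool = False


def guidance(summary: dict[str, bool], ctx: ProjectContext) -> list[str]:
    """Map license facts onto the project context, flagging conflicts with ❌.

    `summary` is a hypothetical dict of booleans such as
    {"commercial_use": False, "redistribution": True, "derivatives": True}.
    Missing keys are treated as "not permitted" to err on the side of caution.
    """
    checks = [
        ("commercial_use", ctx.commercial, "commercial deployment"),
        ("redistribution", ctx.redistributes_weights, "redistributing weights"),
        ("derivatives", ctx.fine_tunes, "fine-tuning"),
    ]
    notes = []
    for key, needed, label in checks:
        if not needed:
            continue  # the project does not rely on this permission
        if summary.get(key, False):
            notes.append(f"✅ License permits {label}.")
        else:
            notes.append(f"❌ License may not permit {label}; review before deploying.")
    return notes


summary = {"commercial_use": False, "redistribution": True, "derivatives": True}
for line in guidance(summary, ProjectContext(commercial=True, fine_tunes=True)):
    print(line)
```

Only the permissions the project actually depends on are reported, which keeps the output short and focused on what could block this particular deployment.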

Here’s how it works:

- You load a model into Bud Runtime.

- The runtime detects the license file—from repositories, model cards, or custom paths.

- It parses and summarizes the license, identifying clauses under common categories (e.g., usage, modification, redistribution).

- It flags terms with visual markers:

  - ✅ Green Tick: Clear, permissive, or industry-standard terms

  - ❌ Red Cross: Risky or restrictive clauses that may impact deployment

- It provides contextual explanations so you understand what each term means and how it might affect your use case.
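
The steps above can be sketched in a few lines. This is a deliberately simple, hypothetical illustration: it looks only for conventional `LICENSE` file names (plus an optional custom path), splits the text into sentences, and tags each sentence with the first matching keyword rule. The file names, categories, and regular expressions are assumptions for illustration, not Bud Runtime’s implementation, and keyword matching is far too crude for real legal analysis.

```python
import re
from pathlib import Path
from typing import Optional

# Conventional license file names; a hypothetical search order.
LICENSE_CANDIDATES = ("LICENSE", "LICENSE.md", "LICENSE.txt")

# Toy rules: (category, pattern, flag). Within a category, restrictive
# patterns come first so that e.g. "non-commercial" is not read as permissive.
RULES = [
    ("usage", re.compile(r"non-?commercial", re.I), "❌"),
    ("usage", re.compile(r"\bcommercial\b", re.I), "✅"),
    ("modification", re.compile(r"no derivatives|(?:may|shall) not modify", re.I), "❌"),
    ("modification", re.compile(r"\bmodif", re.I), "✅"),
    ("redistribution", re.compile(r"(?:may|shall) not (?:re)?distribute", re.I), "❌"),
    ("redistribution", re.compile(r"\b(?:re)?distribut", re.I), "✅"),
]


def find_license_text(model_dir: Path, custom_path: Optional[Path] = None) -> Optional[str]:
    """Return license text from a custom path or a conventional file name."""
    candidates = [custom_path] if custom_path else []
    candidates += [model_dir / name for name in LICENSE_CANDIDATES]
    for path in candidates:
        if path.is_file():
            return path.read_text(encoding="utf-8")
    return None


def flag_clauses(text: str) -> list[tuple[str, str, str]]:
    """Split text into sentences and tag each with (category, flag, sentence)."""
    results = []
    for sentence in re.split(r"(?<=[.!?])\s+", text.strip()):
        for category, pattern, flag in RULES:
            if pattern.search(sentence):
                results.append((category, flag, sentence))
                break  # first matching rule wins; one tag per sentence
    return results


sample = (
    "Permission is granted for commercial use. "
    "You may modify the model. "
    "You may not redistribute the weights."
)
for category, flag, sentence in flag_clauses(sample):
    print(flag, category, "-", sentence)
```

Because only the first matching rule is applied, a sentence that mixes permissions and restrictions receives a single tag, and model-card metadata is ignored entirely; a real analyzer would need to handle both far more carefully.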

Let’s see how it works in the video below:

Why This Matters

Avoid Costly Mistakes: Unknowingly using a model whose license restricts commercial use could expose your team to lawsuits or force product changes. Our automated tool makes these restrictions visible from day one.

Accelerate Model Evaluation: Traditionally, evaluating licenses slows down your team—legal reviews, back-and-forths, and uncertainty. Now, developers can make informed choices quickly, reducing friction in experimentation and deployment.

Stay Audit-Ready: For enterprises operating under strict regulatory frameworks (e.g., in finance, healthcare, or government), demonstrating due diligence in license compliance is essential. Bud’s feature creates a traceable record of license evaluation and compliance.
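
A traceable record mostly comes down to capturing which license text was evaluated, when, and with what outcome. The sketch below shows one way to do that, assuming a JSON Lines audit log; the record fields, the `audit_record` and `verify` helpers, and the model identifier are hypothetical and not Bud Runtime’s actual format. Hashing the license text with SHA-256 lets a reviewer later confirm that the file on disk is the same one that was assessed.

```python
import hashlib
import json
from datetime import datetime, timezone


def _sha256(text: str) -> str:
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def audit_record(model_id: str, license_text: str, flags: dict[str, str]) -> str:
    """Build one JSON Lines entry documenting a license evaluation."""
    record = {
        "model_id": model_id,
        "license_sha256": _sha256(license_text),
        "flags": flags,  # e.g. {"usage": "❌", "redistribution": "✅"}
        "evaluated_at": datetime.now(timezone.utc).isoformat(),
    }
    # sort_keys gives a stable field order; ensure_ascii=False keeps emoji readable.
    return json.dumps(record, sort_keys=True, ensure_ascii=False)


def verify(record_line: str, license_text: str) -> bool:
    """Check that a license text still matches the hash stored in a record."""
    return json.loads(record_line)["license_sha256"] == _sha256(license_text)


line = audit_record("example-org/example-model", "Sample license text.", {"usage": "✅"})
print(verify(line, "Sample license text."))  # True
print(verify(line, "Edited license text."))  # False
```

Appending one such line per evaluation produces a simple, append-only history that compliance teams can review or re-verify later.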

Built for Developers, Trusted by Legal

We designed this feature to meet the needs of both technical users and legal stakeholders.

- For developers, it offers clarity without legalese.

- For legal/compliance teams, it offers structured summaries and risk assessments.

- For product leaders, it reduces uncertainty in roadmap planning and model adoption.

As open-source AI matures, the tools surrounding it need to mature too. Bud Runtime’s license analysis feature is part of a broader movement toward responsible AI development. Just as we automate testing, security scanning, and model benchmarking, we must also automate compliance. We believe that by helping teams adopt AI more responsibly and efficiently, we’re not just solving a usability issue; we’re helping the ecosystem grow sustainably.

The feature is live now in the latest version of Bud Runtime. Simply load your models as usual, and the license analysis will appear automatically in your dashboard or CLI summary.

Final Thoughts

Legal complexity shouldn’t be a blocker to innovation. By automating license analysis, Bud Runtime helps you focus on building great AI-powered products—while staying compliant and confident. This is just one of many small, smart features we’re building to make the future of Generative AI more usable, scalable, and safe.

Have feedback? We’d love to hear how this feature works for you—or what we should build next.
