Part 1 : Methods, Best Practices and Optimisations (This article)

Part 2: Guardrail Testing, Validating, Tools and Frameworks

As organizations embrace large language models (LLMs) in critical applications, guardrails have become essential to ensure safe and compliant model behavior. Guardrails are external control mechanisms that monitor and filter LLM inputs and outputs in real time, enforcing specific rules or policies. This differs from alignment techniques (like RLHF or constitutional AI) which shape the model during training to avoid harmful outputs by default. Even well-aligned models can produce unsafe or policy-violating content on occasion, so guardrails act as a defense layer between the user and the model. They can block or modify disallowed prompts before they reach the LLM and filter or redact problematic model responses before they reach the user.

In 2024–2025, a variety of guardrail methods have emerged – both academic approaches and commercial tools – to address threats like prompt injection attacks, unsafe outputs, and regulatory compliance. This report surveys state-of-the-art guardrail techniques and best practices for cloud-based and on-premises/edge LLM deployments.

Detecting and Defending Against Prompt Injection

Prompt injection attacks occur when a malicious or cleverly crafted input causes an LLM to ignore prior instructions or produce unauthorized behavior. For example, an attacker might include “Ignore previous instructions” or use a role-play scenario to trick the model into bypassing its safety rules. Modern guardrail systems attempt to detect such injections through various means:

- Static Pattern Filters: Many guardrails use pattern matching to catch known malicious phrases (e.g. the classic “Ignore all prior instructions” jailbreak trigger). However, attackers often obfuscate these patterns. Research in 2025 showed that inserting invisible characters (zero-width spaces) or homoglyphs can fool pattern-based filters while remaining intelligible to the LLM. One study demonstrated “emoji smuggling” – hiding malicious instructions inside Unicode emoji metadata – which bypassed multiple top guardrail systems with a 100% success rate. These findings underscore that naive string matching is insufficient against adaptive prompt injection.

- Classifier Models: A common defense is a dedicated classifier (often a smaller ML model) that flags or blocks suspected prompt injections. For instance, Meta’s Prompt-Guard-86M classifier was released with Llama 3.1 to detect injection and jailbreak attempts. Yet even this model proved vulnerable – researchers found that simply adding spaces between the letters of the trigger phrase (e.g. “I g n o r e p r e v i o u s i n s t r u c t i o n s”) evaded the detector. The fine-tuning of Prompt-Guard had “minimal effect on single characters,” allowing such obfuscated prompts to slip through. Overall, no single classifier was foolproof: a recent multi-guardrail evaluation found no guardrail consistently outperforms the others – each had significant blind spots depending on the attack technique.

- LLM-Based Self-Checks: An alternative approach is to use the main LLM (or another LLM) to analyze inputs for attacks. For example, self-check prompting can ask the LLM (via a system prompt) “Does the user input contain a prompt injection attempt?”. This leverages the model’s own understanding, and frameworks like NVIDIA’s NeMo Guardrails support such self-check guardrails on either user input or model output. While flexible, this method incurs extra token overhead and latency, and if the LLM is already compromised by the injection, its self-check may be unreliable.

- Prompt Isolation and Structuring: Developers can also reduce injection risk through prompt engineering techniques. For instance, “sandwiching” user prompts between system instructions or using structured APIs (functions) can limit where user-provided text can influence the model’s behavior. Tools like OpenAI’s function calling and system message role help delineate model instructions from user content. However, sophisticated attacks still find ways around these constraints, especially if the model was not robustly trained against them.

In practice, robust prompt-injection defense uses a layered approach. It might combine a lightweight input sanitizer (removing high-risk symbols or keywords), a classifier or LLM-based detector for subtler attacks, and aligned training of the base model so that even if an injection reaches it, the model is less likely to comply. Regular red-teaming is also crucial: security teams now routinely test their LLM apps with known jailbreaks and new adaptive attacks to discover vulnerabilities.

Recent work emphasizes that defenses must anticipate attacker adaptation – e.g. adversaries iteratively evolving prompts to fool a detector. A 2025 study showed that adaptive attacks could break all eight defense methods it examined, including paraphrasing-based filters and perplexity checks. This arms race nature means prompt-injection guardrails should be continuously updated and monitored rather than “set and forget.” As one security researcher noted, current guardrails often operate on “fragile assumptions” and need more continuous, runtime testing and adaptive defenses to keep up with evolving prompt attacks.

Restricting Harmful or Policy-Violating Outputs

Beyond prompt manipulation, organizations must ensure that LLMs do not produce content that is disallowed, harmful, or non-compliant with policies (e.g. hate speech, violence, private data leaks, etc.). Modern guardrail solutions address this through a mix of model alignment and output filtering:

- Aligned Model Behavior: Large providers train their models with techniques like Reinforcement Learning from Human Feedback (RLHF) and Constitutional AI (using AI feedback with a set of rules) so that the model itself is less likely to generate harmful content. For example, Anthropic’s Claude is tuned to follow a written “constitution” of ethical principles, and OpenAI’s GPT-4 was heavily RLHF-trained to refuse instructions for illicit or unsafe activities. This model-level alignment significantly reduces overtly toxic or dangerous outputs in many cases. A Palo Alto study noted output guardrails had low false-positive rates partly “attributed to the LLMs themselves being aligned to refuse harmful requests”. However, alignment is not perfect – clever prompts can still elicit undesired content, and alignment may not cover an organization’s specific policy nuances (for example, what one company considers “harassment” or “bias” might differ from the base model’s training).

- Output Content Filters: As a backstop, nearly all LLM platforms use content moderation models to scan the model’s response before it’s returned to the user. OpenAI’s Content Moderation API, Azure’s Content Filtering (Prompt Shield), and Google’s Vertex AI safety filters are examples. These classifiers detect categories like hate speech, self-harm, sexual or violent content, requests for illegal activities, etc., and either block the response or replace it with a safe refusal. In a June 2025 evaluation of three major cloud LLM providers, researchers found all their built-in content filters caught many unsafe outputs – but with widely varying effectiveness. Common failure modes included: false positives (overly aggressive blocking of harmless text) and false negatives (missing truly harmful content). For instance, code-related queries were frequently misclassified as potential exploits, indicating difficulty distinguishing benign coding help from malicious instructions. On the other hand, certain stylized attacks (e.g. role-playing a scenario to coax disallowed info) bypassed filters, leading to the model returning harmful content that the output filter also failed to catch. This aligns with other 2024 findings that jailbreak prompts can still succeed against top-tier models and their filters, especially in languages or contexts the filters weren’t thoroughly trained on. Notably, a multilingual benchmark showed that existing guardrails are largely ineffective on toxic prompts in other languages and easily defeated by non-English jailbreaks.

- Rule-Based Constraints: Many guardrail frameworks allow defining explicit deny-lists or regex-based rules to enforce policies. For example, Amazon Bedrock’s safety system supports “denied topics” (entire categories that an app should avoid) and custom word filters for specific phrases (like blocking certain slurs, or even competitor names in a business context). It also provides PII/sensitive information filters that detect personal data and either block or redact it in the output. These deterministic rules are useful for an organization’s zero-tolerance items – e.g. ensuring no output ever contains a Social Security number, or no medical chatbot ever gives medication dosage advice. The advantage is speed and clarity (they operate in under a second and their behavior is transparent). The downside is limited scope: static rules can’t cover every way to express a harmful idea and can produce false positives if too broad. Thus, they are often combined with ML-based content filters for more nuanced judgement.

- Response Transformation or Refusal: When a would-be harmful answer is detected, guardrails either block it entirely or sanitize it. A common strategy is instructing the model to respond with a refusal message (e.g. “I’m sorry, I cannot assist with that request”) whenever the guardrails detect a disallowed category. Some systems can also autorewrite responses – for example, an answer containing a minor policy violation might be edited by the guardrail to remove the offending part rather than throwing away the whole response. NVIDIA’s NeMo Guardrails can override unsafe LLM responses with a safe refusal on the fly. This kind of on-the-fly adjustment ensures no harmful text reaches the end user, though it risks altering the model’s output (which must be done carefully to avoid misrepresentation). In high-stakes uses, a “kill switch” approach might be taken: if an output is flagged, it is simply not delivered at all, triggering either an error message or human review. Allganize’s on-premises AI guidance, for example, recommends having “‘kill-switch’ mechanisms to manage AI outputs and mitigate risks associated with unexpected behavior” as part of a comprehensive governance layer.

Despite these measures, gaps remain in commercial systems. Attacks using obfuscated or contextually tricky prompts can slip through content filters – as evidenced by >70% success rates for prompt injection bypass across almost all tested guardrails in one 2025 analysis. On the flip side, excessive filtering can impede normal functionality (e.g., an AI coding assistant that censors words like “execute” or “kill” might struggle to discuss process management or Unix commands). The key is tuning guardrails to minimize both false negatives and false positives. Many providers are refining their models and filters (for instance, after community feedback that early GPT-4 filters were too stringent, OpenAI adjusted the thresholds to reduce false alarms). Multilingual and domain-specific safety is an active area of improvement – ensuring the guardrails recognize harmful content expressed in different languages, slang, or industry jargon. Academic research has pointed out that today’s guardrails “lack robustness against jailbreaking prompts” and novel phrasing, underscoring a need for more sophisticated and context-aware moderation techniques.

Adapting Guardrails to Organizational Requirements

Every organization has unique standards and regulatory obligations, so one-size-fits-all guardrails often need customization. In 2024–2025, many guardrail solutions introduced features to adapt policies and incorporate feedback loops for continuous improvement:

- Custom Policy Definitions: Modern enterprise-focused platforms let organizations define precise rules and content policies that reflect their industry and values. For example, Fiddler AI’s guardrail service supports “Custom Policy Guardrails” where a company can enforce bespoke rules beyond generic safety categories. Similarly, TrueFoundry’s AI Gateway allows admins to “define precise rules, such as masking PII, filtering disallowed topics, or blocking unwanted words” to ensure outputs align with the company’s brand voice and legal requirements. This might include uploading a list of banned terms (specific to the business), setting stricter rules around medical or financial advice, or disallowing the AI from mentioning certain products. These custom rules can be layered on top of base content filters – providing an organization-specific safety net.

- Configurable Sensitivity and Thresholds: Different use cases have different risk tolerances. Therefore, guardrail systems now often allow tuning how strict the filters are. Amazon Bedrock Guardrails, for instance, let developers configure threshold levels for content categories – e.g. deciding what counts as “mild” vs “severe” hate speech and whether mild cases are allowed or not. Fiddler’s guardrails enable teams to set threshold scores for each risk dimension to decide when to accept or reject a response. By adjusting these dials, organizations can reduce false positives for benign content in a low-risk setting, or conversely be extra cautious in a highly regulated context. This adaptability is important for aligning the LLM’s behavior with internal policy nuances: for example, a bank might tolerate mild profanity in internal tools but not in customer-facing chatbots, which can be achieved by tuning the profanity filter sensitivity.

- Feedback Loops and Monitoring: Adapting guardrails is not a one-time event – it’s an ongoing process. Best practices involve continuous monitoring of guardrail triggers and misses, then feeding that data back into policy updates or model tweaks. Enterprise platforms emphasize robust logging: Allganize notes that a governance layer should “offer robust monitoring and logging…creating comprehensive audit trails for compliance” and tracking AI agent behavior. Fiddler’s integrated solution not only blocks content in real-time but also “analyzes [it] for patterns that might indicate systemic issues”, enabling teams to identify if a particular prompt keeps slipping past or being mis-flagged. For example, if logs show many false positive blocks on the keyword “bomb” (perhaps flagging discussions about bomb-defusion in a game context as terrorism), the team can refine the policy or add context exceptions. Some guardrail systems provide explanations when they block something – e.g. Fiddler’s will give detailed reasons about which policy was violated. This helps developers understand and adjust prompts or model config to reduce unwanted blocks. Additionally, human feedback can be looped in: users or moderators can flag if a response was inappropriately filtered or if an offensive output got through, and that feedback can lead to guardrail rule updates or further model fine-tuning.

- Organization-Specific AI Tuning: In cases where rules alone aren’t enough, organizations sometimes fine-tune the LLM itself on their policy guidelines (essentially performing their own alignment). This might involve supervised fine-tuning on examples of allowed vs disallowed responses or doing reinforcement learning where the reward model is designed around the company’s ethics and style. While resource-intensive, this approach creates a model that “understands” the custom policies intrinsically. Anthropic’s Constitutional AI method can be seen in this light – companies can in principle supply their own “constitution” of rules for the model to follow in training or via system prompts. Indeed, Anthropic has suggested that Claude’s core principles can be adjusted or expanded by enterprises for their needs. OpenAI’s GPT-4 API similarly allows a system message to encode a custom policy, though as Dynamo AI’s report points out, packing too many policy instructions into every prompt (via prompt engineering) can introduce significant token overhead and even degrade model performance. A balance must be struck between explicit policy prompts vs. inherent model behavior. A common practice is to implement critical hard rules via guardrails (ensuring absolute compliance) while also instructing the model in softer terms about the organization’s preferences to guide it proactively.

Guardrails in 2025 are not static filters; they are adaptive frameworks. They are designed to evolve with the organization: one can start with default safety settings, then progressively tailor them based on real-world usage and feedback. The most effective deployments treat guardrails as part of an ongoing governance cycle – define policies, enforce and monitor, learn from incidents, then refine policies. This ensures the guardrails remain aligned with both external regulations and internal values, even as threats and business needs change.

Production Best Practices for Guardrails

Deploying LLM guardrails in production requires careful consideration of performance, reliability, and user experience. Poorly implemented guardrails might protect safety at the cost of usability (e.g. high latency or frequent needless refusals), whereas a balanced implementation mitigates risks with minimal impact on the primary task. Here are key production best practices from 2024–2025:

Minimizing Overhead and Latency

Guardrails inherently introduce extra processing – every input and/or output may undergo validation logic or additional model inference. The goal is to minimize this overhead so that user experience remains smooth:

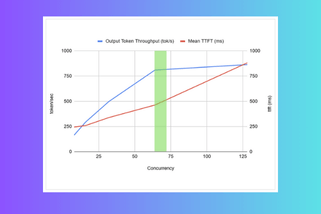

- Lightweight Models & Parallel Processing: Whenever possible, use efficient models or heuristics for guardrail checks, especially in latency-sensitive applications. For example, instead of using a full large model to judge outputs, many systems employ small specialized models. Meta’s Prompt-Guard is only 86M parameters (tiny relative to a 70B LLM) for fast classification. Fiddler’s “Trust” safety models are optimized to run in <100ms latency per request by being task-specific and computationally light. These can often handle enterprise traffic volumes (millions of requests per day) without major lag. Running guardrail checks in parallel with generation can hide their cost – e.g., start scanning partial output as it streams. NVIDIA’s guardrail service supports streaming, reducing time-to-first-token so the user isn’t kept waiting for the entire answer plus a check at the end.

- Efficient Policy Implementation: Avoid overly verbose or repetitive guardrail logic in prompts. A Dynamo AI analysis highlighted that naïvely prepending a long list of rules to every prompt (prompt-engineering based guardrails) can explode token usage and cost, potentially tripling latency and cost for an LLM application. In one scenario, defining a dozen detailed guardrail instructions took ~250 tokens, which over millions of requests became 4x more expensive than the model’s output tokens. The lesson is to offload such policies to external checks or shorter prompts. Using concise prompts or turning policies into code (checks) can be far more efficient than feeding pages of rules into the model each time.

- Caching and Tiered Checking: Not every interaction may need full-blown scrutiny. One best practice is risk-based guardrailing – apply the most expensive checks only when a simpler check indicates something suspicious. For instance, a regex or keyword scan might quickly flag a prompt as obviously safe (no known bad patterns) or obviously dangerous (contains a prohibited term). Safe inputs can skip deeper analysis, whereas borderline inputs trigger a heavier LLM-based evaluation or multiple classifiers. This tiered approach preserves resources and latency for the cases that truly need them. Additionally, caching decisions for identical prompts (if appropriate) can save time on repeat content.

- Integration and Infrastructure Optimizations: In on-prem deployments, co-locating guardrail services on the same machine or GPU as the LLM can reduce network overhead. If the guardrail is an API call (as with some cloud moderation APIs), some latency is added by network round-trip – in those cases batching requests or using asynchronous processing can help. Modern LLM ops platforms sometimes provide a unified middleware where the LLM and guardrail models run side by side, intercepting requests internally (e.g., Amazon’s Bedrock Guardrails intercept calls within the service fabric). Moreover, ensure your guardrail code is non-blocking – for instance, allow the LLM to start formulating an answer while an input classifier runs, only halting final delivery if a block condition is confirmed.

Ensuring Accuracy and Reducing False Flags

Maintaining a high signal-to-noise ratio in guardrail enforcement is vital. If the system cries wolf too often (false positives), users lose trust or get frustrated; if it misses actual wolves (false negatives), the whole safety purpose is defeated. Some best practices to improve accuracy include:

- Multi-Modal and Redundant Checks: Relying on one single mechanism can be brittle. Many deployments use redundant checks of different types so that one can catch what another misses. For example, combine a keyword filter with an ML classifier – the classifier can handle nuanced cases, while the keyword list provides a backstop for any explicit slurs or known disallowed phrases that should never appear. If the classifier is unsure, the system could fall back to a second opinion (even using the main LLM to double-check its own output for compliance). This diversity of checks makes the overall system more robust to evasion.

- Tuning and Testing: Calibrate guardrails using real data. Before full production rollout, run a large set of representative prompts (both innocuous and adversarial) through the system to see how the guardrails perform. The Unit42 comparative study, for instance, methodically tested 100+ malicious prompts and noted the false negative rates of each platform. Enterprises can conduct similar evaluations on their own models. Use the results to adjust thresholds – e.g. if too many safe queries about “bash scripting kill process” are blocked due to the word “kill,” refine the policy. Red-teaming is an ongoing test method: periodically, security teams or external experts attempt to jailbreak the system with new techniques, and any successful bypass leads to guardrail improvements (be it adding a detection for the new pattern or strengthening the model via training). Leading organizations treat prompt-injection and content safety testing as part of regular QA, akin to penetration testing in traditional software.

- User Experience Design: Sometimes a guardrail will inevitably trigger on a user’s request or response. How this is handled affects user trust. Best practice is to be transparent and graceful: for example, instead of the AI simply failing silently or giving a generic error, provide a friendly message like “Sorry, I can’t assist with that request” (for disallowed prompts) or a mild apology if part of the answer was omitted for safety. Some systems even explain at a high level: e.g. “This content has been removed because it may contain sensitive information.” Such feedback can be helpful. However, one must be careful not to reveal too much (e.g., saying exactly which rule was broken could aid attackers in learning how to circumvent it). It’s a balance between transparency and maintaining security. In internal enterprise tools, being more explicit is often fine (developers might get a note like “Blocked output – reason: potential HIPAA violation (PHI detected)”), which also serves as feedback for them to refine their queries or data input.

- Continuous Improvement via Analytics: Use analytics to track guardrail performance over time. Metrics like block rate, false positive rate (perhaps estimated via user appeals or overrides), and incident count (times harmful content reached user) can be very informative. If a spike in incidents occurs, it may correspond to a new kind of attack surfacing in the wild – prompting an update. Many enterprises set up dashboards for guardrail metrics. Fiddler’s platform, for example, monitors “risky prompts and responses over time” to provide insights and allow root-cause analysis by the AI risk team. This kind of operational monitoring ensures the guardrails maintain effectiveness as the underlying model is updated or as users find creative new ways to query the system.

In essence, performance and accuracy are treated as first-class objectives for guardrails, alongside safety. The best guardrail is one that the end-user barely notices – it silently does the right thing in the background, only intervening when absolutely necessary and with minimal disruption. Achieving that requires thoughtful engineering, tuning, and iteration.

Guardrails in Resource-Constrained Environments (On-Premises and Edge)

Many organizations, especially those handling sensitive data (finance, healthcare, government), opt for on-premises or edge deployment of LLMs rather than cloud services. Deploying guardrails in these environments introduces unique challenges and opportunities:

- Lightweight and Efficient Models: Edge or on-prem deployments often cannot run gigantic models due to limited GPU/CPU resources. The guardrail components therefore need to be optimized for low resource usage. Open-source efforts have emerged to fill this need. For example, Meta’s PromptGuard classifier (86M parameters) can be self-hosted and integrated with open LLMs like Llama-2 to provide a basic safety net without cloud services. Similarly, the Llama Guard project (open-source) provides content moderation models (like a 7B distilled model) that developers can run locally. When using such models, quantization and model distillation techniques help – compressing models to 8-bit or 4-bit precision can allow them to run on CPUs or small GPUs common in edge devices. NVIDIA’s NeMo Guardrails is explicitly designed for flexible deployment: its default guardrail models (for toxicity, etc.) are relatively small and fine-tuned for the task to reduce latency, making them practical to include in on-prem microservices. The ability to plug in third-party tools like Microsoft’s Presidio (an open-source PII detection library) provides additional lightweight options for privacy filtering without heavy ML overhead.

- On-Prem Privacy Advantages: Running guardrails on-prem can actually enhance compliance – none of the data or prompts need to leave your environment. For organizations worried about GDPR or HIPAA, this is a big win. In fact, smaller domain-specific LLMs on-prem are seen as a trend that will improve security posture. Gartner predicts that by 2027, half of enterprise GenAI models will be domain-specific, often deployed in private. These models, by being narrower in scope, “enable strict access controls, compliance with industry regulations, and adherence to standards like HIPAA or GDPR” while reducing attack surface. In practice, an on-prem LLM setup can have a strict data firewall: e.g. it might include a guardrail that anonymizes or masks PII at ingestion (before even the LLM sees it), ensuring no raw personal data is processed. TrueFoundry’s on-prem guardrail gateway does exactly this, “automatically detect[ing] and anonymiz[ing] personally identifiable information before it leaves the system,” preventing accidental exposure of emails, SSNs, etc., and helping comply with privacy laws.

- Hardware and Deployment Strategies: In resource-constrained settings, clever deployment can make a big difference. Some strategies include: running guardrail checks on a separate thread or even a separate device (e.g., have a small GPU for the safety model and a larger one for the main LLM, so the two run in parallel). If the edge device is extremely constrained (like a mobile phone), offloading certain checks to a nearby server or a more capable device might be necessary – though this reintroduces some network dependency. Containerization and orchestration (Kubernetes, etc.) on-prem can ensure that guardrail microservices scale out under load; for instance, if a flood of requests comes in, additional instances of the moderation model can spin up to handle it, thereby maintaining throughput without increasing latency too much. Some enterprise solutions (Fiddler, Microsoft Azure Stack, etc.) offer VPC-deployable guardrail services, meaning the same safety infrastructure available in cloud can run in an isolated on-prem cluster. Fiddler advertises that its Guardrails can be deployed even in air-gapped environments with all data staying local – a critical requirement for industries like defense or healthcare that often prohibit any external connectivity.

- Edge Use Cases and Optimization: On the edge (e.g., AI running in a car or on an IoT device), real-time performance is paramount and connectivity may be limited. Here, rule-based guardrails regain attractiveness due to their speed and low footprint. A set of regex and keyword checks for known bad content can execute in milliseconds on a CPU. If using a model, something like a tiny 5MB TensorFlow Lite model for objectionable content detection could be deployed. Additionally, edge scenarios might utilize network-level guardrails – for example, a corporate network appliance (firewall for AI) that scans LLM API traffic for sensitive data patterns, blocking anything disallowed from leaving the local network. This is a coarse but effective measure to stop large-scale data exfiltration via prompts or responses.

Deploying guardrails outside the cloud often means trading scale for control. One has to be more frugal with model sizes and computations, but gains the ability to tightly govern data and compliance. Many vendors are now offering hybrid solutions – e.g., running the main heavy LLM in the cloud, but doing on-prem preprocessing (masking PII) and postprocessing (result filtering) locally. That way, sensitive information is stripped before it ever goes to the cloud model, addressing privacy concerns. Each organization must assess its requirements: highly regulated industries lean towards fully self-contained (on-prem LLM + on-prem guardrails), whereas others might accept a cloud model if guardrails ensure no secret data is sent. Either way, the guardrail concept extends equally to local deployment, with a strong focus on efficiency and privacy.

Ensuring Compliance with GDPR, HIPAA, and Other Regulations

Regulatory compliance imposes specific guardrail needs, from preventing personal data leaks to auditing model decisions. Guardrails are a key tool for making LLM deployments meet legal obligations in 2024–2025:

- Personal Data Protection: Privacy regulations like GDPR (Europe) and HIPAA (USA health) mandate safeguarding personal identifiable information (PII) and protected health information (PHI). LLM guardrails therefore often include PII detection and masking features. As noted, enterprise solutions can automatically redact or pseudonymize PII in model inputs/outputs. For instance, if a user prompt to a medical assistant contains a patient name or phone number, a guardrail can replace it with a placeholder before the prompt is processed (or instruct the model not to include such details in its answer). This reduces the risk of violating data-minimization principles. Microsoft’s Presidio library (integrated in some guardrail frameworks) can identify over 100 types of sensitive entities (names, addresses, medical record numbers, etc.) for this purpose. Ensuring that no sensitive data is inadvertently logged or used for training is also critical – many guardrail systems will scrub logs or at least provide configuration to exclude sensitive fields from any persistent storage. These measures help comply with rules like GDPR’s requirement to minimize stored personal data and to honor user requests for data deletion.

- Policy Enforcement and Audit Trails: Regulations often require that AI systems adhere to certain standards (e.g., no discrimination, fairness in lending decisions, etc.) and that organizations can audit their AI’s decisions. Guardrails contribute here by enforcing content and usage policies consistently and keeping records. For example, if company policy (or law) says an AI advisor should not provide financial investment recommendations beyond factual information, a guardrail can detect when advice is being given and block or modify it, thus keeping the system in compliance with financial regulations. Each such intervention can be logged. Allganize emphasizes “comprehensive audit trails” for on-prem AI, meaning every prompt/response and any guardrail action taken is recorded for review. If a regulatory inquiry occurs or an end-user complains about AI output, these logs allow the organization to demonstrate due diligence – showing what was asked, how the AI responded, and how the guardrails acted. In highly regulated domains, having a human-review loop for certain categories is also wise: e.g., if an LLM is about to output something that might be legally sensitive (like medical advice), the guardrail could flag it for a person to approve. This kind of setup aligns with HIPAA’s idea of not disclosing PHI without proper authority, etc.

- Right to Erasure and Data Residency: GDPR gives users the right to have their personal data erased. A challenge with LLMs is that conversation logs or fine-tuning data might contain such personal data. Guardrails can assist by segregating and managing data – for instance, storing personal data tokens separately or only processing them transiently. Some organizations choose to not log full conversations, or they systematically mask personal identifiers in logs, making it easier to comply with deletion requests (since the data was never stored in identifiable form). Additionally, some guardrail tools enforce geolocation rules: if an LLM is used in the EU, a guardrail might block any attempt by the model to output certain classes of sensitive EU-defined personal data or to ensure that data stays within certain boundaries. This isn’t a direct language safety issue, but a governance one – nonetheless, guardrails at the application layer can route requests to different endpoints depending on region, etc., to maintain data residency requirements.

- Ethical and Bias Guardrails: Compliance is not only about privacy; it can also involve ethical AI considerations which increasingly find their way into guidelines and laws (for example, upcoming EU AI Act categories for unacceptable use). Guardrails can enforce ethical use policies by detecting biased or toxic language (preventing violations of anti-discrimination laws) and by ensuring transparency. An interesting development is guardrails that enforce explanations: e.g., requiring the LLM to provide sources for certain statements or a disclaimer when it’s giving a medical opinion (to comply with guidelines that AI should disclose it’s not a human professional). These can be seen as guardrails on the style/format of output to meet compliance and transparency standards. For instance, a guardrail could append: “This answer is generated by AI and not a licensed doctor” automatically in a healthcare chatbot’s responses, if that is required by policy.

- Certified Models and Tools: Some commercial LLM services have started obtaining compliance certifications (HIPAA compliance programs, SOC 2 for security, etc.). However, if an enterprise uses them, the onus is still on the enterprise to configure and use them in a compliant manner. Guardrails are part of that configuration. For example, Azure’s OpenAI service comes with content filtering and offers customer the ability to set which categories to block entirely vs. just log. AWS’s Bedrock Guardrails include privacy controls out of the box, reflecting that enterprises want turnkey compliance support. Knowing the limitations is important: as mentioned, an independent study found Azure’s Prompt Shield could be bypassed by ~72% of malicious inputs in tests, so relying solely on it might not meet a strict interpretation of “secure deployment.” Organizations dealing with sensitive data might layer additional in-house guardrails or use multiple filters (defense-in-depth) to reach the needed confidence level that no violation will occur.

In summary, guardrails act as the compliance safety harness for LLMs. They enforce privacy by scrubbing sensitive info, enforce policy by filtering prohibited content, and support auditability through logging and consistent behavior. As regulations evolve (like data protection, AI transparency laws), we can expect guardrails to incorporate more features addressing those (for example, automatic documentation of why an AI gave a certain answer could be a future guardrail to meet Algorithmic Transparency requirements). For now, the combination of privacy filters, content controls, and audit logs forms the backbone of using LLMs in regulated environments.

Comparison of Guardrail Solutions (Academic & Commercial)

Both academic researchers and commercial providers have developed various guardrail solutions by 2024–2025. The table below compares a selection of notable approaches, highlighting their deployment context, key features, and known limitations or gaps as reported in literature and user feedback:

| Guardrail Solution | Deployment | Approach & Features | Known Gaps / Limitations |

| OpenAI Built-in Guardrails (GPT-4, etc.) | Cloud (OpenAI API) | Alignment: Extensive RLHF training to refuse disallowed queries. Content Filter: External Moderation API classifies outputs into categories (hate, self-harm, sexual, violence, etc.) and blocks policy violations. | Tends toward cautious behavior, which can flag benign content (e.g. coding queries mistaken as exploits). Still vulnerable to cleverly phrased jailbreaks (some indirect requests bypass filters). Requires sending data to OpenAI servers (data privacy concerns for some). |

| Azure OpenAI “Prompt Shield” | Cloud (Azure) | Content Filtering: Similar categories as OpenAI (built on OpenAI’s tech), with adjustable strictness. Integrated into Azure’s GenAI service with logging and an optional human review tool. | Research showed substantial false negatives – e.g. ~72% of malicious prompts bypassed Azure’s guardrails in one test. Limited public info on custom rule capability (beyond category toggles). Cloud-only (enterprises must trust Microsoft with data, though Azure offers compliance guarantees). |

| Google Vertex AI Safety | Cloud (Google) | Safety Filters: Multi-category output moderation (toxic language, violence, etc.) and tunable safety settings. Prompt Guidelines: Allows developers to set high-level “don’t talk about X” parameters. | Efficacy varies; likely faces similar issues as others (some false refusals, and jailbreaking exploits not fully solved). Multilingual support is still a challenge (Google’s filters can miss non-English toxicity). Primarily cloud, which may not satisfy data residency needs. |

| Anthropic Claude (Constitutional AI) | Cloud (Anthropic API) | Intrinsic Alignment: Model follows a built-in “constitution” of ethical principles to avoid harmful or biased outputs. Less reliance on external filters – the model self-polices by generating a critique and revision if an output violates its rules (per training). | Generally produces safe outputs, but not immune to jailbreaks – determined attackers can still coerce it with complex strategies. Customization of the constitution by users is limited (cannot easily inject entirely new company-specific rules at runtime, beyond instructive prompt). |

| NVIDIA NeMo Guardrails | Open-Source / On-Prem or Cloud | Toolkit: Provides a microservice that intercepts prompts/responses and checks them using either the main LLM (self-check prompts) or dedicated NIM models (small models fine-tuned for safety tasks). Built-in NIMs: Content Safety (23 unsafe content categories), Jailbreak Detection, Topic Control. Also supports plugging in third-party detectors (e.g. regex scanners, Presidio for PII). Supports streaming moderation for low latency. | Provides powerful framework but requires integration effort and tuning. Underlying ML models (e.g., Meta’s classifier for jailbreaks) can be circumvented by adversarial input obfuscation (as seen with Prompt-Guard’s failure on spaced text). Keeping the guardrail models updated with new attack data is a manual task. |

| Meta’s Prompt-Guard (open-source) | Open-Source (HuggingFace) | Classifier Model: 86M-parameter transformer fine-tuned to detect high-risk prompts (injections, jailbreaks). Released with LLaMA-3.1 for developers to integrate into custom pipelines. Can be run locally due to small size. | Bypassable via simple text perturbations – e.g. adding spaces between characters completely fooled it. This highlights a general limitation of static classifiers against adaptive attacks. Meta is working on fixes, but it shows how attackers can target the guardrail model itself. |

| Fiddler Guardrails (Trust Service) | Enterprise (Cloud or On-Prem) | Multi-metric Scoring: Uses a suite of proprietary Trust Models to score each prompt and response on dimensions like toxicity, violence, PII presence, hallucination, prompt injection, etc.. Very fast (<100ms per request) and scalable, handling millions of queries/day. Customizable: Security teams can set threshold policies (e.g. block if toxicity > X) and deploy the system in a private VPC. Integrated monitoring dashboards for analyzing violations over time. | New as of 2025, so independent evaluations are limited. Relies on Fiddler’s own models – if those have blind spots, sophisticated attacks may still get by (the Mindgard study did not specifically name Fiddler, but similar systems were evaded by unicode tricks). Being a commercial product, it introduces vendor lock-in and additional cost (though it aims to be cost-efficient via small models). |

| TrueFoundry AI Guardrails | Enterprise Platform (Cloud or Hybrid) | AI Gateway with Guardrails: Sits between users and the LLM. Offers rule-based configuration: admins can mask PII, define disallowed topics, and block custom keywords via a simple interface. Enforces these on both input and output, ensuring consistent policy adherence. Fine-grained controls possible (different rules by user role or context). Focus on data privacy: automatically strips out sensitive data to help meet GDPR/HIPAA obligations. | Mainly rule-based, so effectiveness depends on the thoroughness of the rules provided. Might not catch complex harmful content that doesn’t match specified patterns. Likely needs to be paired with an ML-based filter for nuanced cases (the platform can integrate with external classifiers, but that might be extra work). As with any cloud service, using it means trusting the vendor’s security for the sensitive data it processes (though they emphasize compliance). |

| Protect AI (AI Radar) | Enterprise (Cloud or On-Prem) | Security-Focused Guardrails: Tools aimed at LLM supply chain and security. Includes models to detect prompt injection and data leakage. Offers versions (v1, v2) of a classifier that can be deployed to scan prompts in real-time. Likely provides integration into CI/CD for AI (to catch issues in development). | Earlier versions had substantial weaknesses – Mindgard reports a ~77% injection bypass rate for Protect AI’s initial guardrail model. The improved v2 reduced this greatly (to ~20% bypass), but no system is foolproof yet. Without continuous updates, new exploits (emoji hiding, etc.) could still succeed. Also, some users note these tools can be noisy, requiring tuning to fit their specific application to avoid false alarms (no public data yet on v2 false positive rate). |

| Vijil Dome (Startup) | Enterprise (Cloud SaaS) | Guardrails-as-a-Service: Vijil offers a hosted guardrail API that uses fine-tuned LLMs and custom ML models to evaluate prompts/responses. They boast high accuracy (claiming “up to 2× more accurate than Amazon Bedrock guardrails” per marketing) and cover many known jailbreak scenarios out of the box. Features tests for 15+ DAN (Do Anything Now) style attacks, toxicity, etc., and integrates with monitoring tools (WandB integration for logs). | Independent testing revealed it’s far from impervious – the Mindgard study showed Vijil’s prompt injection detector was circumvented ~88% of the time and jailbreak detector ~92% under certain evasion attacks. This indicates that, like others, it struggles with adversarial obfuscation. Being a third-party cloud service, data governance and latency need consideration (though it likely caches results and is optimized for speed, network latency is present). |

Conclusion

LLM guardrail methods have rapidly evolved in 2024–2025 to address the dual challenge of keeping AI helpful and keeping AI safe. The landscape now includes everything from model-level alignment techniques to external rule-based systems and dedicated safety models, each contributing a layer of defense. We’ve seen that prompt injections and jailbreaks remain a cat-and-mouse game – no single solution stops all attacks, but a layered approach (alignment + input/output filters + continuous testing) makes a significant difference in raising the bar. Likewise, ensuring harmful or policy-violating content is filtered out requires balancing act: too lax and you risk compliance breaches; too strict and you hinder usability. The best practices highlighted – custom policy tuning, feedback-driven refinement, latency optimizations – are all aimed at finding that balance in a production setting.

One clear trend is the move towards adaptive and context-aware guardrails. Static rules or models quickly fall behind as users (and attackers) discover new ways to prompt AI. Future guardrails will likely employ more real-time learning – for example, deploying updates to the safety model based on the latest attack it saw, or using meta-learning to better generalize to novel inputs. Another trend is integrating guardrails deeply into the application stack (as seen with AWS’s built-in guardrail options and Azure’s services), making it easier for developers to adopt safety best practices by default. At the same time, open-source and on-prem guardrail tools give enterprises control and transparency, which is crucial for trust. We can expect ongoing collaboration between academia and industry in this space: research identifies new vulnerabilities (like emoji smuggling), and industry responds with patches and new techniques, which in turn invites further testing. In conclusion, deploying LLMs responsibly is now recognized as a multi-faceted endeavor. Robust guardrails for prompt injection defense, content moderation, custom policy enforcement, and compliance are all pieces of the puzzle. When thoughtfully implemented, they enable organizations to harness powerful LLM capabilities safely, whether in the cloud or at the edge. By following the methods and best practices outlined above – and staying abreast of the latest guardrail developments – practitioners can significantly mitigate risks while still reaping the benefits of generative AI innovation.

.png)